Remember when facial filters were just simple overlays that barely stuck to your face?

Those days seem ancient now.

Today’s AR face tracking creates experiences so seamless that the line between digital and real continues to blur.

Behind this evolution lies deep learning technology that’s completely transformed how AR systems recognize and track human faces.

The Evolution of Facial Recognition in AR

Early AR facial systems relied on basic landmark detection.

They identified key points like eyes and mouth using simple geometric methods.

Results were passable but prone to errors when lighting changed or faces moved quickly.

Deep learning changed everything.

Modern neural networks can now identify and track facial features with remarkable precision, even in challenging conditions.

This advancement has opened doors to AR experiences that were once impossible to create.

How Deep Neural Networks Track Faces

Deep learning approaches face tracking through specialized neural network architectures.

Convolutional Neural Networks (CNNs) excel at identifying facial features from visual data.

These networks process camera inputs through multiple layers, each extracting increasingly complex patterns.

Early layers detect simple patterns such as edges and changes in brightness.

Deeper layers combine these features to recognize specific facial structures.

The final layers output facial landmark positions or mesh parameters, from which a complete facial model can be fitted and tracked in three-dimensional space.

This layered approach makes tracking robust, so accuracy often holds up even when faces are partially obscured.
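
To make the idea of an early layer concrete, here is a minimal sketch of a single convolution pass that responds to vertical edges. It is a hand-written filter on a toy grid, not a trained network; the kernel, the image values, and the function name `convolve2d` are all illustrative assumptions.

```python
# Illustrative only: one hand-written 3x3 filter, not a trained CNN layer.

def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            total = 0
            for ky in range(kh):
                for kx in range(kw):
                    total += image[y + ky][x + kx] * kernel[ky][kx]
            row.append(total)
        output.append(row)
    return output

# Sobel-style kernel: responds where brightness rises from left to right.
VERTICAL_EDGE_KERNEL = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

# Toy grayscale image: dark (0) on the left, bright (9) on the right.
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]

edge_map = convolve2d(image, VERTICAL_EDGE_KERNEL)
for row in edge_map:
    print(row)  # large values mark the dark-to-bright boundary
```

In a real network the kernel values are learned from data rather than written by hand, and many such filters are stacked so that deeper layers can respond to eyes, noses, and mouths instead of raw edges.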

Beyond Basic Recognition: Understanding Expressions

Modern AR doesn’t just track face position – it understands expressions.

Deep learning models can now estimate subtle facial muscle movements, such as a raised brow or a tightened lip, that often signal emotions.

They differentiate between dozens of expressions from smiles to raised eyebrows.

This emotional intelligence enables AR systems to respond naturally to users’ facial cues.

Virtual characters can maintain eye contact or react to user expressions.

AR filters can transform based on whether you’re smiling, frowning, or winking.
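
As a rough illustration of how a filter might react to a wink, the sketch below computes the eye aspect ratio (a commonly used openness measure) from six eye landmarks and compares both eyes. The landmark ordering, the sample coordinates, and the 0.2 threshold are assumptions chosen for this example; a production system would get landmarks from its tracking model and tune the threshold per device.

```python
import math

# Assumed threshold: below this ratio the eye is treated as closed.
EAR_CLOSED_THRESHOLD = 0.2

def eye_aspect_ratio(eye):
    """eye holds six (x, y) points: p1 and p4 are the corners,
    p2 and p3 sit on the upper lid, p6 and p5 on the lower lid."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)

def classify_eyes(left_eye, right_eye):
    """Return 'open', 'wink', or 'blink' from two sets of eye landmarks."""
    left_closed = eye_aspect_ratio(left_eye) < EAR_CLOSED_THRESHOLD
    right_closed = eye_aspect_ratio(right_eye) < EAR_CLOSED_THRESHOLD
    if left_closed and right_closed:
        return "blink"
    if left_closed or right_closed:
        return "wink"
    return "open"

# Hypothetical landmark sets in arbitrary pixel units.
OPEN_EYE = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
CLOSED_EYE = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]

print(classify_eyes(OPEN_EYE, CLOSED_EYE))
```

Learned expression models typically output many such signals at once, but a simple geometric ratio like this shows how a landmark stream can be turned into a trigger for an effect.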

These capabilities create significantly more engaging and interactive experiences.

Real-Time Performance Breakthroughs

The most impressive achievement in deep learning facial tracking is its speed.

Complex neural networks that once required powerful servers now run efficiently on mobile devices.

Well-optimized models can process facial data at 60 frames per second or higher on recent hardware.

This performance allows AR applications to maintain consistent tracking without noticeable lag.
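
A frame rate target translates directly into a time budget per frame, and every stage of the pipeline has to fit inside it. The sketch below works through that arithmetic; the stage names and timings are made-up numbers for illustration, not measurements from any real device.

```python
def frame_budget_ms(target_fps):
    """Time available to process one frame, in milliseconds."""
    return 1000.0 / target_fps

def fits_budget(stage_times_ms, target_fps):
    """True if the summed stage times fit inside one frame at target_fps."""
    return sum(stage_times_ms.values()) <= frame_budget_ms(target_fps)

# Hypothetical per-stage timings in milliseconds.
stage_times_ms = {
    "camera_capture": 2.0,
    "face_detection": 4.0,
    "landmark_model": 6.0,
    "rendering": 3.5,
}

total = sum(stage_times_ms.values())
for fps in (30, 60, 90):
    budget = frame_budget_ms(fps)
    verdict = "fits" if fits_budget(stage_times_ms, fps) else "too slow"
    print(f"{fps} fps: budget {budget:.2f} ms, pipeline {total:.2f} ms, {verdict}")
```

With these example numbers the pipeline fits a 60 fps budget but misses a 90 fps one, which is why a model that feels smooth on a phone screen may still need trimming for a higher-refresh headset.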

Users experience smooth, responsive interactions that feel natural rather than computerized.

The technical obstacles that once made real-time facial AR challenging have largely been overcome.

Applications Transforming User Experiences

Deep learning facial tracking has enabled entirely new categories of AR applications.

Virtual try-on systems allow users to sample makeup, glasses, or jewelry with realistic positioning and lighting.

Video conferencing platforms use facial tracking to drive avatars that keep eye contact between participants.

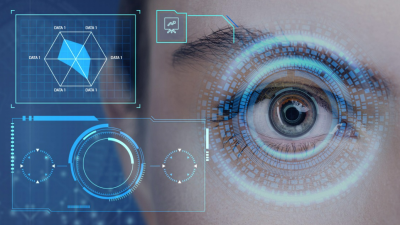

Security applications verify identity through facial recognition enhanced with liveness detection.

Entertainment apps create character animations driven by users’ actual facial expressions.

Educational tools visualize anatomical structures overlaid on users’ faces for medical training.

Each of these applications leverages the precision and responsiveness that deep learning provides.

Addressing Privacy and Ethical Considerations

The power of facial recognition brings important responsibilities.

User consent must be clearly obtained before facial data is processed.

Transparent data handling practices should be established and communicated.

Biometric information requires stronger protection than most other data types, because a face cannot be changed the way a password can.

Developers must consider the ethical implications of their facial tracking applications.

The industry continues to develop standards for responsible implementation of these technologies.

Technical Implementation Challenges

Despite significant advances, challenges remain in implementing deep learning facial tracking.

Faces vary widely across ethnicities, ages, and skin tones, so training data must be inclusive for tracking to work equally well for everyone.

Varying lighting conditions can still impact recognition accuracy.

Processing efficiency must be balanced against tracking precision.
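
One common way this trade-off shows up is weight quantization: storing model weights as 8-bit integers instead of floats makes a model smaller and usually faster, at the cost of a small rounding error. The sketch below shows a simple symmetric scheme on a handful of made-up weights. The values and function names are assumptions for illustration, and real toolchains use more elaborate variants.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale."""
    largest = max(abs(v) for v in values)
    scale = largest / 127.0 if largest > 0 else 1.0
    quantized = [round(v / scale) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the integers."""
    return [q * scale for q in quantized]

# Made-up weights standing in for one small layer.
weights = [0.42, -1.27, 0.05, 0.98, -0.31]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("int8 values:", quantized)
print("max rounding error:", max_error)
```

Each weight lands within half a quantization step of its original value. Whether that loss is acceptable depends on the use case, which is why it is worth measuring tracking accuracy before and after any such optimization.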

Battery consumption remains a consideration for mobile AR applications.

Developers must carefully optimize their models for specific use cases and device constraints.

The Future Landscape of Facial AR

Deep learning facial tracking continues to evolve rapidly.

Research focuses on capturing increasingly subtle facial micro-expressions.

3D facial reconstruction from single camera inputs is becoming more precise.

Emotional state assessment is growing more sophisticated.

Integration with other sensory inputs creates multimodal AR experiences.

These advances promise even more convincing and useful AR applications in the near future.

Emerging Technologies Enhancing Facial AR

Several complementary technologies are combining with deep learning to enhance facial AR.

Dedicated neural processing hardware accelerates complex facial tracking algorithms.

Edge AI keeps sensitive facial data on users’ devices rather than in the cloud.

Transfer learning allows developers to customize pre-trained models for specific applications.
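
The core pattern of transfer learning is to keep an existing feature extractor frozen and train only a small new layer on top of it. The sketch below shows that pattern in plain Python. The "pre-trained" weights, the toy data, and the hyperparameters are all invented for illustration; a real project would load an actual pre-trained model through a deep learning framework.

```python
import math

# Stand-in for a pre-trained backbone: these weights are never updated.
FROZEN_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]

def frozen_features(x):
    """Apply the frozen linear layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)))
            for row in FROZEN_WEIGHTS]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(samples, labels, epochs=500, lr=0.5):
    """Fit only the new logistic-regression head with plain SGD."""
    head = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            feats = frozen_features(x)
            pred = sigmoid(sum(h * f for h, f in zip(head, feats)) + bias)
            error = pred - y  # gradient of the log loss w.r.t. the logit
            head = [h - lr * error * f for h, f in zip(head, feats)]
            bias -= lr * error
    return head, bias

def predict(x, head, bias):
    """Return True when the head assigns the sample to class 1."""
    feats = frozen_features(x)
    return sigmoid(sum(h * f for h, f in zip(head, feats)) + bias) >= 0.5

# Toy task-specific data: class 1 when the first input dominates.
samples = [[2, 0], [3, 1], [2.5, 0.5], [0, 2], [1, 3], [0.5, 2.5]]
labels = [1, 1, 1, 0, 0, 0]

head, bias = train_head(samples, labels)
print([predict(x, head, bias) for x in samples])
```

Because only the head's few parameters are trained, this kind of customization needs far less data and compute than training a full face model from scratch.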

These technological partnerships are creating more capable and responsible AR systems.

Conclusion

Deep learning has fundamentally transformed AR facial recognition and tracking.

The precision, speed, and contextual understanding provided by neural networks have enabled AR experiences that feel genuinely magical.

As these technologies continue to mature, we’ll see increasingly sophisticated applications that respond naturally to our expressions and emotions.

The future of AR isn’t just about recognizing faces – it’s about understanding the people behind them.

Deep learning is making that understanding possible in real-time, on everyday devices, opening new frontiers for how we interact with augmented reality.