Emotion Recognition AI: The Future of Intelligent AR

Augmented reality (AR) has revolutionized the way humans interact with digital environments. Among the most transformative advancements in this field is the integration of Emotion Recognition AI into AR platforms. By understanding human emotions in real time, AR systems can adapt experiences dynamically, creating highly personalized interactions. This technology does not simply detect a smile or frown; it interprets subtle cues from facial expressions, voice tone, and even physiological indicators, offering insights that were previously accessible only through in-depth human observation.

The impact of Emotion Recognition AI in AR is multifaceted. From enhancing gaming experiences to improving remote collaboration, this technology bridges the gap between digital interfaces and human psychology. For instance, educational AR applications can detect frustration or confusion in students, prompting the system to adjust content delivery or provide additional guidance. In corporate settings, AR-enabled training modules can identify signs of engagement, stress, or fatigue, allowing organizations to tailor learning pathways in real time.

Understanding How Emotion Recognition AI Works

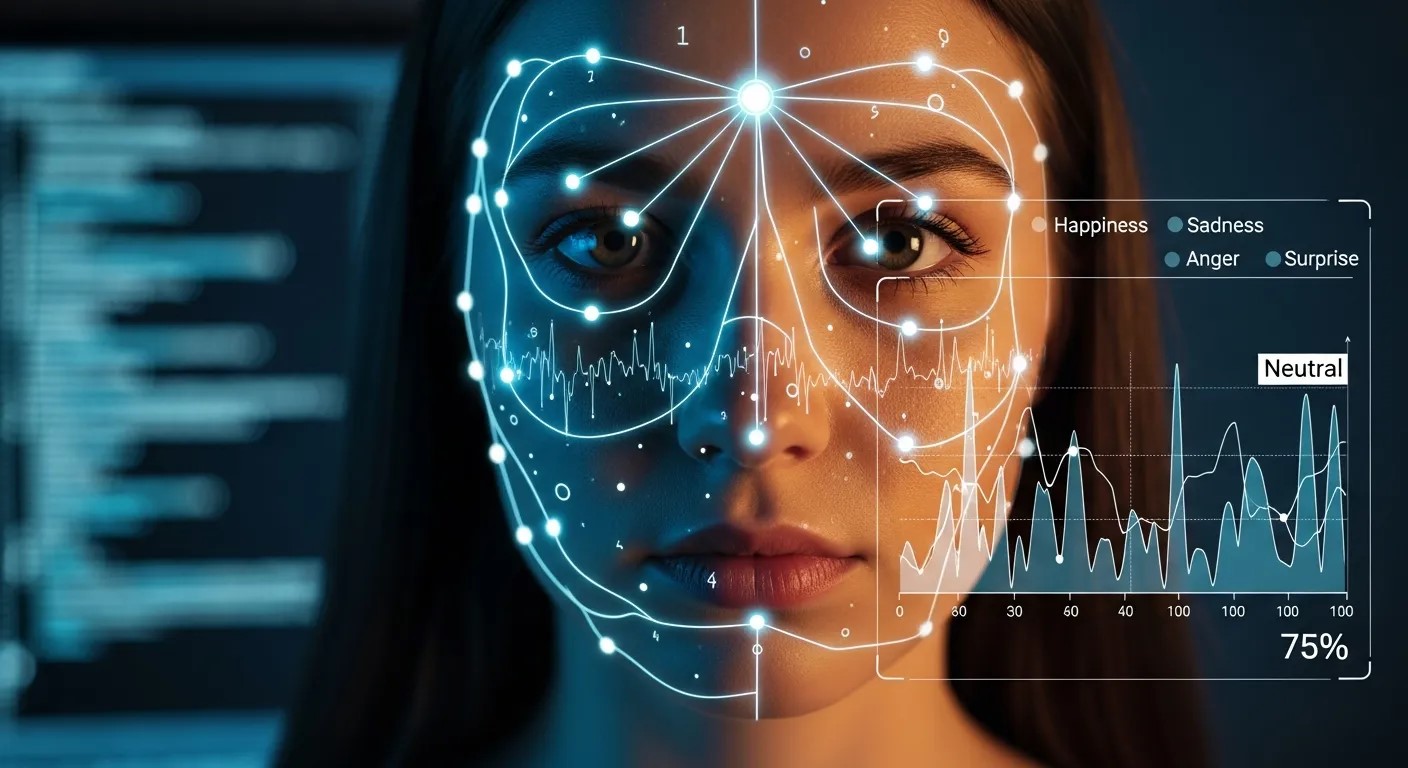

At its core, Emotion Recognition AI relies on advanced machine learning algorithms trained to analyze patterns in human behavior. Computer vision plays a central role, using cameras and sensors to capture facial micro-expressions. These micro-expressions are often too brief for the human eye to notice, yet they can signal emotions such as surprise, anger, happiness, or disgust. Audio analysis complements the visual data by examining vocal attributes like pitch, tone, and tempo, which further improves the AI’s ability to interpret emotional states.
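One common way to combine visual and vocal cues is late fusion: each modality produces its own probability distribution over emotions, and the two are blended with a weight. The sketch below assumes each classifier already outputs such a distribution. The `fuse_modalities` function, the 0.6 face weight, and the extra "neutral" label are illustrative assumptions, not a specific product's method.

```python
from typing import Dict

# Emotion labels from the paragraph above, plus an assumed "neutral" class.
EMOTIONS = ("surprise", "anger", "happiness", "disgust", "neutral")


def fuse_modalities(face: Dict[str, float], voice: Dict[str, float],
                    face_weight: float = 0.6) -> Dict[str, float]:
    """Weighted late fusion of per-modality emotion probabilities.

    Missing labels count as probability 0. The result is renormalized to sum
    to 1; if both inputs are empty, a uniform distribution is returned.
    """
    if not 0.0 <= face_weight <= 1.0:
        raise ValueError("face_weight must be between 0 and 1")
    voice_weight = 1.0 - face_weight
    fused = {e: face_weight * face.get(e, 0.0) + voice_weight * voice.get(e, 0.0)
             for e in EMOTIONS}
    total = sum(fused.values())
    if total == 0.0:
        return {e: 1.0 / len(EMOTIONS) for e in EMOTIONS}
    return {e: p / total for e, p in fused.items()}


def top_emotion(distribution: Dict[str, float]) -> str:
    """Return the label with the highest fused probability."""
    return max(distribution, key=distribution.get)


# Example: the face looks happy, the voice sounds mostly neutral.
fused = fuse_modalities({"happiness": 0.8, "neutral": 0.2},
                        {"happiness": 0.3, "neutral": 0.7})
print(top_emotion(fused), round(fused["happiness"], 2))
```

Late fusion keeps each modality's model independent, so one can be retrained or dropped (for example, when the microphone is muted) without touching the other.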

Integrating this capability into AR introduces unique challenges and opportunities. Unlike conventional screens or mobile devices, AR overlays interact directly with the user’s environment, so Emotion Recognition AI must operate seamlessly without causing distractions or cognitive overload. Many systems address this by combining edge computing with cloud-based analytics: time-sensitive inference runs on the device, which keeps responses fast and limits how much raw sensor data leaves it, while heavier analysis runs in the cloud.

One emerging area where this is particularly critical is AR Quantum Computing experiments. Quantum-enhanced AR systems demand highly adaptive interfaces, and emotion-aware feedback helps participants engage more effectively with complex simulations. For instance, researchers using AR to visualize quantum states can have the system adjust visualizations based on stress levels or engagement, creating a more intuitive learning environment.

Transforming User Experiences in AR

The real power of Emotion Recognition AI lies in its ability to enhance user experiences. In retail, AR mirrors can assess a shopper’s emotional reaction to products and offer personalized recommendations, effectively blending digital and physical shopping. Similarly, entertainment applications can alter narratives in real time based on audience reactions, creating a deeply immersive experience.

Remote collaboration also benefits immensely. Traditional video conferencing often struggles to capture nuanced human emotions, leading to miscommunication. By integrating Emotion Recognition AI into AR meeting platforms, organizations can monitor engagement levels and adjust presentations accordingly. This has implications for productivity, team cohesion, and employee satisfaction. Notably, this is a step beyond conventional ROI Tracking for AR Campaigns, which focuses primarily on metrics like views, clicks, or session duration. Emotion-aware analytics provide a richer understanding of user engagement, translating into actionable insights for businesses.

The Psychological Dimensions of Emotion Recognition AI

Humans are inherently social creatures, and our interactions are guided by subtle emotional cues. When AR systems leverage Emotion Recognition AI, they tap into these cues to create experiences that resonate on a psychological level. Research in cognitive psychology suggests that emotional state strongly influences learning, memory retention, and decision-making, so feedback that responds to it in real time can support all three. In AR training modules, detecting frustration early allows the system to adjust task difficulty or provide supportive prompts, improving learning outcomes.

Moreover, emotional awareness in AR can reduce cognitive fatigue. By recognizing signs of stress or overload, systems can implement adaptive pacing, minimizing frustration and maintaining engagement. This aligns with insights from AI vs Human in Market Research, where understanding emotional responses has historically been key to predicting behavior. In AR environments, AI can now take on much of this role, applying emotional analysis across thousands of interactions simultaneously.

Ethical Considerations in Emotion Recognition AI

Despite its potential, the implementation of Emotion Recognition AI raises important ethical considerations. Privacy is paramount; AR systems must ensure that sensitive emotional data is processed securely and not misused. Transparent consent mechanisms and data anonymization are critical.
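A minimal sketch of one data-handling step, assuming emotion events arrive as dictionaries: direct identifiers are dropped and the user ID is replaced by a keyed hash (HMAC-SHA256 from Python's standard library). The field names are hypothetical. This is pseudonymization, which is weaker than full anonymization, because the key holder can still link records to a user, and the emotional data itself remains sensitive.

```python
import hashlib
import hmac

# Hypothetical field names for an emotion-event record.
DIRECT_IDENTIFIERS = {"user_id", "name", "email", "face_image"}


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Keyed hash of a user ID: stable for a given key, cannot be recomputed without it."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_identifiers(record: dict, secret_key: bytes) -> dict:
    """Copy a record, drop direct identifiers, and add a pseudonymous subject token."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["subject"] = pseudonymize(record["user_id"], secret_key)
    return cleaned


event = {"user_id": "u-123", "email": "a@example.com", "emotion": "calm", "confidence": 0.82}
print(strip_identifiers(event, secret_key=b"rotate-me"))
```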

Bias in AI models is another concern. Emotion recognition systems trained primarily on limited demographic datasets may misinterpret emotional cues from underrepresented groups. Developers must adopt inclusive training practices and continually audit models to mitigate these risks. Failing to do so not only undermines trust but also contributes to AR Inequality, where certain populations may receive suboptimal experiences due to inaccurate emotional assessments.
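A basic audit might compare accuracy across demographic groups on a labelled evaluation set and flag the model when the gap between the best and worst group exceeds a tolerance. The sketch below assumes such a set exists; the group names, labels, and 0.05 threshold are placeholders, and an accuracy gap is only one of several fairness measures worth tracking.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Sample = Tuple[str, str, str]  # (demographic group, predicted label, true label)


def accuracy_by_group(samples: Iterable[Sample]) -> Dict[str, float]:
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, predicted, actual in samples:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {g: correct[g] / total[g] for g in total}


def audit(samples: Iterable[Sample], max_gap: float = 0.05):
    """Return per-group accuracy, the best-to-worst gap, and whether it exceeds max_gap."""
    acc = accuracy_by_group(samples)
    if not acc:
        raise ValueError("no samples to audit")
    gap = max(acc.values()) - min(acc.values())
    return acc, gap, gap > max_gap


labelled = [
    ("group_a", "happy", "happy"), ("group_a", "angry", "angry"),
    ("group_a", "sad", "sad"), ("group_a", "happy", "happy"),
    ("group_b", "angry", "happy"), ("group_b", "sad", "sad"),
    ("group_b", "happy", "happy"), ("group_b", "angry", "angry"),
]
accuracy, gap, flagged = audit(labelled)
print(accuracy, gap, flagged)
```

Running this audit on every model release, not just once, is what turns it into the continual auditing the paragraph calls for.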

Applications Across Industries

The integration of Emotion Recognition AI in AR is not limited to entertainment or corporate training. Healthcare applications, for instance, benefit from emotion-aware AR in therapeutic contexts. Patients with anxiety or PTSD can engage with controlled AR scenarios while the system monitors stress responses, adjusting difficulty or pacing to optimize therapy.

In retail and marketing, understanding customer emotions enables dynamic pricing strategies, personalized advertisements, and adaptive product displays. By quietly analyzing facial and vocal cues, businesses can enhance engagement without overt intrusion. This is complementary to emerging strategies in AI Conversational Commerce, where chatbots and AR assistants respond intelligently to consumer sentiment, offering tailored suggestions and building rapport.

Education is another sector witnessing transformation. Emotion-aware AR lessons can detect student boredom or confusion, prompting instant feedback or alternative explanations. By aligning instructional design with emotional engagement, educators can foster deeper learning and retention.

Finally, in collaborative AR platforms, emotion recognition can enhance team dynamics. By detecting disengagement or stress in participants, systems can propose breaks, reassign tasks, or offer support, improving overall project outcomes. This is particularly valuable in contexts where AR Autonomous Agents assist human teams, allowing AI collaborators to make informed decisions that consider human emotional states.

Technical Integration of Emotion Recognition AI in AR

Integrating Emotion Recognition AI into AR platforms requires careful coordination between hardware, software, and AI models. Modern AR devices, whether smart glasses, headsets, or mobile AR apps, come equipped with high-resolution cameras, depth sensors, and motion tracking capabilities. These sensors provide the raw data needed for emotion detection. Machine learning models then analyze this input in real time, interpreting subtle cues from facial expressions, micro-movements, and even voice patterns.

A key challenge in integration is latency. Emotion data must be processed almost instantaneously to influence AR experiences dynamically. Edge computing solutions are increasingly employed to reduce reliance on cloud processing and ensure real-time responsiveness. By analyzing data locally on the device, Emotion Recognition AI can adjust visual overlays, avatars, or interactive elements immediately, maintaining immersion without noticeable lag.
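The edge-versus-cloud trade-off can be framed as a latency budget. A minimal sketch, assuming the adaptation should be ready within one display frame (1000 / 60 ≈ 16.7 ms at 60 Hz): keep only the inference paths whose total latency fits, then prefer the most accurate one. The path names, latencies, and accuracy figures are illustrative placeholders, not measurements.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class InferencePath:
    name: str
    inference_ms: float
    network_rtt_ms: float  # 0 for on-device inference
    accuracy: float

    @property
    def total_ms(self) -> float:
        return self.inference_ms + self.network_rtt_ms


def frame_budget_ms(refresh_hz: float) -> float:
    """Time available per displayed frame."""
    return 1000.0 / refresh_hz


def choose_path(paths: List[InferencePath], budget_ms: float) -> Optional[InferencePath]:
    """Most accurate path that fits the budget, or None if nothing fits."""
    feasible = [p for p in paths if p.total_ms <= budget_ms]
    if not feasible:
        return None
    return max(feasible, key=lambda p: p.accuracy)


edge = InferencePath("edge", inference_ms=8.0, network_rtt_ms=0.0, accuracy=0.80)
cloud = InferencePath("cloud", inference_ms=3.0, network_rtt_ms=40.0, accuracy=0.90)
print(choose_path([edge, cloud], frame_budget_ms(60)).name)
```

The network round trip alone can exceed a frame budget, which is why per-frame adaptation tends to land on the device while slower analytics can still use the cloud.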

Another aspect of integration involves interoperability with existing AR software frameworks. Developers often rely on AR development kits to manage tracking, spatial mapping, and rendering. By layering emotion recognition algorithms on top of these frameworks, systems can dynamically adapt content according to user emotional states. This capability is particularly useful in scenarios like interactive storytelling, AR-assisted training, or customer experience simulations.
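One way to layer emotion recognition on top of an existing AR framework without modifying it is a publish/subscribe bridge: the classifier publishes states, and content modules subscribe and adapt. The sketch below is framework-agnostic; `EmotionBus`, `StoryScene`, the labels, and the 0.6 confidence cutoff are hypothetical names and values, not part of any AR SDK.

```python
from typing import Callable, List

Handler = Callable[[str, float], None]  # (emotion label, confidence)


class EmotionBus:
    """Minimal publish/subscribe bridge between a classifier and AR content."""

    def __init__(self) -> None:
        self._subscribers: List[Handler] = []

    def subscribe(self, handler: Handler) -> None:
        self._subscribers.append(handler)

    def publish(self, emotion: str, confidence: float) -> None:
        for handler in self._subscribers:
            handler(emotion, confidence)


class StoryScene:
    """Example content module that adapts its pacing to confident readings."""

    def __init__(self, min_confidence: float = 0.6) -> None:
        self.pacing = "normal"
        self.min_confidence = min_confidence

    def on_emotion(self, emotion: str, confidence: float) -> None:
        if confidence < self.min_confidence:
            return  # ignore uncertain readings
        if emotion == "bored":
            self.pacing = "faster"
        elif emotion == "stressed":
            self.pacing = "slower"


bus = EmotionBus()
scene = StoryScene()
bus.subscribe(scene.on_emotion)
bus.publish("bored", 0.9)
print(scene.pacing)
```

Because the framework's tracking and rendering code never calls the classifier directly, emotion features can be added or removed per scene.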

For example, in experimental projects that combine AR Quantum Computing simulations with emotion-aware interfaces, the AI adapts visualizations and complexity based on participant engagement levels. A student struggling with a quantum concept might see simplified models, while highly engaged users experience more advanced simulations. This creates a personalized learning curve that enhances understanding and retention.
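The adaptive learning curve can be sketched as a small step rule over discrete complexity levels, assuming engagement and confusion scores normalized to 0 to 1. The level names and thresholds are hypothetical. Confusion is checked first so that a struggling learner is never moved up.

```python
LEVELS = ("simplified", "standard", "advanced")


def next_level(current: int, engagement: float, confusion: float,
               up_threshold: float = 0.75, down_threshold: float = 0.6) -> int:
    """Step down one level on strong confusion, up one level on high engagement."""
    if confusion >= down_threshold:
        return max(current - 1, 0)
    if engagement >= up_threshold:
        return min(current + 1, len(LEVELS) - 1)
    return current


level = 1  # start at "standard"
level = next_level(level, engagement=0.9, confusion=0.1)
print(LEVELS[level])
```

Moving one level at a time, rather than jumping straight to the extremes, keeps changes gradual if a single reading is noisy.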

Real-World Case Studies

Several industries have already begun implementing Emotion Recognition AI in AR environments, demonstrating its transformative potential.

Healthcare and Therapy

In therapeutic settings, AR applications equipped with emotion recognition capabilities are being used to treat patients with anxiety, PTSD, or social phobias. The system monitors emotional responses during exposure therapy, allowing therapists to tailor interventions more precisely. For instance, if a patient shows signs of heightened stress during an AR scenario, the AI can modify the environment’s intensity or introduce calming elements to prevent overwhelming the individual.
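A minimal sketch of the intensity adjustment described above, assuming a stress score normalized to 0 to 1. It backs off quickly when stress crosses a ceiling and ramps up slowly otherwise. All parameter values are hypothetical; in practice the therapist would set them and could override the system at any point.

```python
def adjust_intensity(intensity: float, stress: float,
                     stress_ceiling: float = 0.7, step_down: float = 0.2,
                     step_up: float = 0.05, min_intensity: float = 0.1,
                     max_intensity: float = 1.0) -> float:
    """One update of a scenario's intensity given a normalized stress score."""
    if stress > stress_ceiling:
        intensity -= step_down  # back off quickly to avoid overwhelming the patient
    else:
        intensity += step_up    # increase exposure gradually
    return min(max(intensity, min_intensity), max_intensity)


intensity = 0.5
for stress in [0.4, 0.5, 0.85, 0.6]:
    intensity = adjust_intensity(intensity, stress)
    print(f"stress={stress:.2f} -> intensity={intensity:.2f}")
```

The asymmetry between `step_down` and `step_up` reflects the goal of preventing overwhelm while still allowing exposure to build over a session.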

Retail and Marketing

Retailers are leveraging emotion-aware AR mirrors and apps to understand customer reactions to products. By analyzing facial expressions and engagement levels, stores can recommend items in real time, enhancing the personalization of the shopping experience. This approach complements strategies in AI Conversational Commerce, where digital assistants use sentiment analysis to guide purchasing decisions subtly and naturally.

Corporate Training

Corporate AR training modules benefit from Emotion Recognition AI by dynamically adjusting lesson difficulty based on participant engagement and emotional state. Employees who exhibit signs of confusion receive additional support, while highly engaged individuals are challenged with advanced tasks. Combining emotion feedback with performance metrics can produce more effective learning outcomes and a better return on training initiatives, extending beyond conventional ROI Tracking for AR Campaigns.

Collaborative AR Workspaces

Emotion-aware AR can enhance remote collaboration by detecting participant engagement and fatigue. In team projects involving AR Autonomous Agents, these AI assistants can redistribute tasks, suggest breaks, or adjust interaction styles based on the emotional cues of human collaborators. This can reduce miscommunication, improve productivity, and foster better interpersonal dynamics in virtual workspaces.

Addressing AR Inequality

While the benefits are clear, there are disparities in access to emotion-aware AR experiences. High-end devices with advanced sensors are often required to leverage full functionality, leaving certain populations at a disadvantage. This contributes to AR Inequality, highlighting the need for inclusive hardware and software design. Developers and policymakers must consider equitable distribution of AR resources to prevent a divide in technological access and opportunities.

Advanced Applications and Future Trends

The future of Emotion Recognition AI in AR is poised for exciting developments:

- Adaptive Storytelling in Entertainment: Emotion-aware AR can monitor audience reactions during immersive storytelling experiences, altering narratives or character behavior to maintain engagement. This dynamic adaptation enhances emotional resonance and creates unique experiences for each viewer.

- Education and Skill Development: AR-based learning platforms can use emotion feedback to personalize lessons. Detecting frustration, boredom, or excitement allows educators to modify content pacing and presentation style. Integrating such systems with AR Quantum Computing simulations can help students explore complex scientific concepts interactively and intuitively.

- Workplace Productivity: Emotion-aware AR tools can identify signs of stress or disengagement, prompting timely interventions. When combined with collaborative AI agents, these systems can optimize team performance, support mental well-being, and improve overall organizational efficiency.

- Market Research and Consumer Insights: Traditional market research relies on surveys, focus groups, and interviews to gauge consumer sentiment. By contrast, emotion-aware AR provides real-time, unobtrusive insights into how users react to products or experiences, complementing approaches like AI vs Human in Market Research. This can give brands actionable intelligence at a scale and speed that surveys and focus groups struggle to match.

Technical Challenges and Ethical Considerations

Despite significant progress, integrating Emotion Recognition AI in AR presents several challenges:

- Data Privacy: Emotional data is deeply personal. AR systems must implement strict security protocols to protect user information, anonymize sensitive data, and ensure compliance with privacy regulations.

- Model Bias: Emotion recognition algorithms trained on limited demographic datasets can misinterpret emotional cues in underrepresented groups. Continuous model auditing and inclusive training are essential to prevent inaccurate or unfair assessments.

- User Comfort and Consent: Users must be fully aware of emotion tracking mechanisms. Transparent consent and clear communication of how data is used are critical to building trust and ensuring ethical deployment.

- Hardware Limitations: Advanced emotion detection requires high-quality sensors. Ensuring accessibility across a range of AR devices is key to reducing AR Inequality and democratizing emotion-aware AR experiences.
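One way to keep emotion-aware features accessible on a range of hardware is to detect which sensors a device has and enable only the modalities it can support, falling back to a non-adaptive experience instead of excluding the device. The sketch below is hypothetical; the sensor flags and modality names are placeholders, not a real device API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class DeviceSensors:
    camera: bool
    depth: bool
    microphone: bool
    biometric: bool


def available_modalities(sensors: DeviceSensors) -> List[str]:
    """List the emotion-sensing modalities this device can support.

    An empty list means the AR experience should run without emotion
    adaptation rather than refuse to run.
    """
    modalities: List[str] = []
    if sensors.camera:
        # Depth data can improve face tracking; a plain camera still allows 2D analysis.
        modalities.append("facial_3d" if sensors.depth else "facial_2d")
    if sensors.microphone:
        modalities.append("vocal")
    if sensors.biometric:
        modalities.append("physiological")
    return modalities


budget_phone = DeviceSensors(camera=True, depth=False, microphone=True, biometric=False)
print(available_modalities(budget_phone))
```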

Deep Personalization Through Emotion-Aware AR Systems

One of the most powerful outcomes of integrating Emotion Recognition AI into augmented reality is the emergence of deeply personalized digital environments. Traditional AR systems respond to gestures and commands, but emotion-aware systems interpret the user’s psychological state as an additional layer of input. This transforms AR from a reactive interface into an adaptive ecosystem that evolves with the user.

Emotion Recognition AI enables AR platforms to interpret emotional signals as behavioral data. When a user expresses excitement, hesitation, or frustration, the system can recalibrate the experience instantly. For example, in immersive learning simulations, Emotion Recognition AI can detect early signs of confusion and automatically simplify instructions. Conversely, when a user demonstrates confidence and engagement, the system can increase complexity to maintain cognitive stimulation.

This personalization is not just about convenience. It reflects a shift toward emotionally intelligent computing. Emotion Recognition AI allows AR systems to simulate empathy by responding in ways that align with human emotional expectations. Users may come to perceive these systems as more intuitive and supportive, which can increase trust and long-term engagement.

Behavioral Psychology Behind Emotion-Adaptive Interfaces

To understand why Emotion Recognition AI is effective in AR, it is important to examine the behavioral psychology behind emotional interaction. Human decision-making is heavily influenced by emotion. Cognitive science shows that emotional responses guide attention, memory formation, and risk assessment. When AR experiences adapt to emotional feedback, they align with natural human cognitive processes.

Emotion Recognition AI helps AR platforms reduce cognitive friction. When users feel overwhelmed, adaptive pacing prevents mental fatigue. When users feel bored, increased stimulation restores engagement. This emotional balancing act mirrors how skilled educators and communicators adjust their approach in real time.

From a psychological standpoint, emotionally adaptive AR environments may engage the brain’s reward mechanisms. Positive emotional reinforcement encourages exploration and experimentation. Emotion Recognition AI identifies these moments and amplifies them through visual cues, interactive feedback, or narrative progression. As a result, users can experience a sense of flow, a mental state associated with peak performance and satisfaction.

System Architecture for Emotion-Driven AR Platforms

Building scalable emotion-aware AR systems requires a layered architecture that integrates sensing, processing, and response mechanisms. At the sensing layer, cameras and biometric sensors capture raw emotional indicators. Emotion Recognition AI processes this data using neural networks trained on multimodal datasets that include facial expressions, voice signals, and behavioral patterns.

The interpretation layer translates emotional signals into actionable insights. Here, Emotion Recognition AI categorizes emotional states and predicts user intent. These predictions feed into the experience management layer, which controls AR content delivery. Visual overlays, sound design, and interaction mechanics are dynamically adjusted to match emotional context.

A simplified architecture model looks like this:

| Layer | Function | Role of Emotion Recognition AI |
|---|---|---|
| Sensing Layer | Capture visual, audio, and biometric data | Extract emotional signals |
| Processing Layer | Analyze patterns with AI models | Classify emotional states |
| Interpretation Layer | Predict behavioral intent | Map emotion to action |
| Experience Layer | Adapt AR content in real time | Personalize interaction |
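The four layers in the table can be sketched as composable functions, each consuming the previous layer's output. In this sketch, the feature names, thresholds, and content actions are hypothetical placeholders, and the rule-based `processing_layer` stands in for a trained neural network.

```python
from typing import Dict, List


def sensing_layer(raw: Dict[str, float]) -> Dict[str, float]:
    """Extract emotional signals; assumes upstream face tracking already produced them."""
    return {"smile": raw.get("smile", 0.0), "brow_furrow": raw.get("brow_furrow", 0.0)}


def processing_layer(signals: Dict[str, float]) -> str:
    """Classify an emotional state (a trained model would replace these rules)."""
    if signals["brow_furrow"] > 0.5:
        return "frustrated"
    if signals["smile"] > 0.5:
        return "happy"
    return "neutral"


def interpretation_layer(state: str) -> str:
    """Map the emotional state to a predicted need or intent."""
    return {"frustrated": "needs_help", "happy": "engaged", "neutral": "continue"}[state]


def experience_layer(intent: str) -> List[str]:
    """Choose AR content adjustments for the predicted intent."""
    return {
        "needs_help": ["show_hint", "slow_pacing"],
        "engaged": ["offer_challenge"],
        "continue": [],
    }[intent]


def run_pipeline(raw: Dict[str, float]) -> List[str]:
    return experience_layer(interpretation_layer(processing_layer(sensing_layer(raw))))


print(run_pipeline({"brow_furrow": 0.8}))
```

Because each layer only depends on the previous layer's output format, a new classifier can replace `processing_layer` without changing the sensing or experience code.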

This modular design allows developers to upgrade Emotion Recognition AI models without redesigning the entire AR platform. It also supports distributed computing, where time-sensitive processing occurs on-device while complex analytics run in the cloud.

Enhancing Immersion Through Emotional Feedback Loops

Immersion is the defining feature of successful AR experiences. Emotion Recognition AI strengthens immersion by creating continuous emotional feedback loops. In these loops, user emotions influence system behavior, and system behavior in turn shapes user emotions.

For example, in interactive storytelling, Emotion Recognition AI can detect suspense or excitement and adjust pacing accordingly. Music intensity, lighting effects, and character interactions respond to emotional cues, creating a narrative that feels alive. Users are no longer passive observers; they become emotional participants in a co-created experience.

This feedback mechanism is especially effective in training simulations. Emergency response drills, medical practice scenarios, and high-risk industrial training benefit from emotion-sensitive adaptation. Emotion Recognition AI identifies stress thresholds and gradually increases exposure to build resilience. Over time, users develop emotional regulation skills alongside technical competence.

Human-Machine Trust and Emotional Transparency

Trust is a central factor in the adoption of intelligent systems. Emotion Recognition AI influences trust by shaping how users perceive machine intent. When AR systems respond appropriately to emotional cues, users interpret those responses as signs of awareness and reliability.

However, trust requires transparency. Users must understand when and how their emotions are being analyzed. Ethical interface design includes visible indicators that Emotion Recognition AI is active and clear explanations of how emotional data influences system behavior. Transparent communication reduces uncertainty and encourages informed consent.

Emotionally transparent AR systems also support user agency. Instead of passively collecting emotional data, they allow users to control sensitivity levels or disable emotion tracking entirely. This flexibility reinforces autonomy while maintaining the benefits of personalization.
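User control can be modeled as a settings object that gates every emotion reading. In this minimal sketch, tracking is off by default (opt-in), and a sensitivity slider maps onto the confidence a reading needs before the system adapts. The class, field names, and the 1.0 to 0.5 threshold mapping are hypothetical. A production system would also stop capturing data upstream when tracking is disabled, not just filter the output.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EmotionSettings:
    tracking_enabled: bool = False  # opt-in: off until the user consents
    sensitivity: float = 0.5        # 0 = adapt only to very confident readings, 1 = adapt eagerly

    def __post_init__(self) -> None:
        if not 0.0 <= self.sensitivity <= 1.0:
            raise ValueError("sensitivity must be between 0 and 1")

    def required_confidence(self) -> float:
        # Maps sensitivity 0..1 onto a confidence threshold of 1.0..0.5.
        return 1.0 - 0.5 * self.sensitivity


def gated_emotion(settings: EmotionSettings, emotion: str,
                  confidence: float) -> Optional[str]:
    """Return the emotion only if tracking is enabled and confidence clears the threshold."""
    if not settings.tracking_enabled:
        return None
    return emotion if confidence >= settings.required_confidence() else None


print(gated_emotion(EmotionSettings(), "stressed", 0.95))
print(gated_emotion(EmotionSettings(tracking_enabled=True, sensitivity=1.0), "stressed", 0.6))
```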

Performance Optimization and Real-Time Processing

Real-time responsiveness is essential for emotion-aware AR. Even slight delays can disrupt immersion. Emotion Recognition AI must operate with high efficiency, balancing computational intensity with energy consumption.

Edge AI acceleration plays a critical role here. Specialized processors execute Emotion Recognition AI models directly on AR devices, minimizing latency. Compression techniques such as model pruning and quantization reduce memory and compute requirements, typically at the cost of a small loss in accuracy that should be measured for each model.
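To illustrate quantization, the sketch below applies symmetric per-tensor int8 quantization to a list of weights: each weight is stored as an integer in [-127, 127] plus one shared float scale. This is a simplified, framework-free version of the idea (pruning is not shown), and the weight values are arbitrary examples.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by q * scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # round() picks the nearest integer; the clamp guards against float overshoot.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 1.0, 0.033]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)
```

Storing 8-bit integers instead of 32-bit floats cuts weight memory roughly fourfold, while the per-weight rounding error stays within half the scale; whether that error affects classification accuracy has to be checked on real evaluation data.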

Developers also employ predictive caching strategies. By anticipating likely emotional transitions, Emotion Recognition AI can prepare adaptive responses in advance, which helps keep interaction seamless even in complex environments.
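One simple way to anticipate emotional transitions is a first-order transition model: count which state tends to follow which, then prepare responses for the most likely next states. The sketch below assumes a Markov-style model is adequate, which is a simplification; the state names and the `prepare` callback are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Callable, Dict, List, Sequence


class TransitionModel:
    """First-order model of observed emotional-state transitions."""

    def __init__(self) -> None:
        self.counts: Dict[str, Counter] = defaultdict(Counter)

    def observe(self, sequence: Sequence[str]) -> None:
        # Count each consecutive (previous, next) pair in the session.
        for prev, nxt in zip(sequence, sequence[1:]):
            self.counts[prev][nxt] += 1

    def most_likely_next(self, current: str, k: int = 1) -> List[str]:
        # .get avoids inserting unseen states into the defaultdict.
        return [s for s, _ in self.counts.get(current, Counter()).most_common(k)]


def prefetch(model: TransitionModel, current: str,
             prepare: Callable[[str], str], k: int = 2) -> Dict[str, str]:
    """Prepare responses for the k most likely next states ahead of time."""
    return {s: prepare(s) for s in model.most_likely_next(current, k)}


model = TransitionModel()
model.observe(["calm", "focused", "frustrated", "calm", "focused", "calm", "focused"])
cache = prefetch(model, "calm", prepare=lambda state: f"assets-for-{state}")
print(cache)
```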

Designing Emotion-Centered User Interfaces

Traditional interface design prioritizes usability and accessibility. Emotion-centered design extends these principles by incorporating affective feedback. Emotion Recognition AI informs interface elements such as color palettes, animation speed, and spatial arrangement.

Warm color tones may appear when the system detects positive engagement, while calming visual themes activate during stress. Micro-interactions, subtle animations that respond to emotional state, create a sense of responsiveness. Emotion Recognition AI ensures these adjustments remain subtle enough to avoid distraction while still enhancing emotional resonance.

Designers must strike a balance between responsiveness and predictability. Overly dramatic emotional adaptation can feel intrusive. Effective emotion-centered design uses gradual transitions that respect user expectations and maintain consistency.
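Gradual transitions can be implemented with exponential smoothing plus a per-frame cap: each frame, a UI parameter moves a fraction of the way toward its target, but never more than a fixed step. The parameter names and default values below are hypothetical.

```python
def step_toward(current: float, target: float,
                smoothing: float = 0.1, max_step: float = 0.05) -> float:
    """Move a UI parameter a fraction of the way to its target, capped per frame."""
    delta = (target - current) * smoothing
    delta = max(-max_step, min(max_step, delta))
    return current + delta


# Fade a hypothetical "calming theme" blend from 0 (off) to 1 (fully on).
blend = 0.0
history = []
for _ in range(30):
    blend = step_toward(blend, 1.0)
    history.append(round(blend, 3))
print(history[:3], history[-1])
```

The cap keeps large emotional swings from producing abrupt visual jumps, and the smoothing makes the final approach to the target ease out rather than stop suddenly.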

Scaling Emotion Recognition Across Platforms

As AR ecosystems expand, cross-platform compatibility becomes increasingly important. Emotion Recognition AI frameworks are evolving toward standardized protocols that allow emotional data to be interpreted consistently across devices.

Cloud-based emotion analytics enable shared learning across platforms. Aggregated, anonymized emotional datasets can improve model accuracy while limiting privacy exposure. Emotion Recognition AI systems learn from diverse user interactions, refining their ability to interpret subtle emotional nuances.
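One common safeguard when aggregating is to suppress any group with too few records, so that small groups cannot be traced back to individuals. The sketch below averages a value per group and drops groups smaller than `k`. The field names and `k=10` are hypothetical. A minimum group size reduces re-identification risk but is not a complete anonymization guarantee on its own.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List


def aggregate_with_min_group(records: Iterable[dict], group_key: str,
                             value_key: str, k: int = 10) -> Dict[str, float]:
    """Average value_key per group, suppressing groups with fewer than k records."""
    groups: Dict[str, List[float]] = defaultdict(list)
    for record in records:
        groups[record[group_key]].append(record[value_key])
    return {g: mean(vals) for g, vals in groups.items() if len(vals) >= k}


records = ([{"region": "north", "engagement": 0.5}] * 12
           + [{"region": "south", "engagement": 0.9}] * 3)
print(aggregate_with_min_group(records, "region", "engagement"))
```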

Scalability also involves cultural adaptability. Emotional expression varies across societies. Emotion Recognition AI must account for cultural context to avoid misinterpretation. Localization strategies include region-specific training datasets and adaptive calibration mechanisms.
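One form of adaptive calibration is to judge each signal relative to the user's own baseline instead of a global threshold. This sketch z-scores a reading against samples captured while the user is at rest, so a reserved expression and an expansive one can both register as clearly above baseline for that person. The class name and readings are hypothetical, and per-user calibration complements region-specific training data rather than replacing it.

```python
from statistics import mean, pstdev
from typing import Sequence


class BaselineCalibrator:
    """Express a signal relative to one user's own neutral baseline (z-score)."""

    def __init__(self, calibration_samples: Sequence[float]) -> None:
        if len(calibration_samples) < 2:
            raise ValueError("need at least two calibration samples")
        self.mu = mean(calibration_samples)
        # Fall back to 1.0 if the baseline has no spread, to avoid division by zero.
        self.sigma = pstdev(calibration_samples) or 1.0

    def normalize(self, value: float) -> float:
        return (value - self.mu) / self.sigma


# Hypothetical smile-intensity readings captured while each user looks neutral.
reserved = BaselineCalibrator([0.05, 0.15])
expansive = BaselineCalibrator([0.4, 0.6])
print(reserved.normalize(0.25), expansive.normalize(0.7))
```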

Long-Term Impact on Human-Technology Interaction

The integration of Emotion Recognition AI into AR signals a broader transformation in human–technology relationships. Interfaces are evolving from tools into collaborative partners that respond to emotional context.

Over time, emotion-aware AR may influence communication norms. As users grow accustomed to emotionally responsive systems, expectations for empathy in digital environments will rise. Emotion Recognition AI will likely shape how future generations perceive interaction with machines, blending emotional intelligence with computational precision.

This evolution raises important philosophical questions about authenticity and agency. While Emotion Recognition AI simulates emotional understanding, it does not experience emotion. Designers and researchers must carefully navigate the boundary between supportive adaptation and artificial emotional mimicry.

Conclusion

Emotion Recognition AI is transforming augmented reality by making digital interactions more adaptive, personalized, and human-centered. By understanding emotional signals, AR systems can respond intelligently, improving engagement, learning, and collaboration. As long as privacy and ethical standards are maintained, Emotion Recognition AI will continue to shape smarter, more natural AR experiences that align closely with real human behavior.