Remember when augmented reality was just floating objects with no awareness of their surroundings?

Those days are disappearing fast.

Today’s AR experiences understand the world around you, thanks to powerful machine learning algorithms that map environments in real time.

The Foundation of Spatial Understanding

Environment mapping serves as the backbone of truly immersive augmented reality.

Without accurate spatial understanding, digital objects can’t interact convincingly with the real world.

Traditional mapping techniques required extensive processing power and often failed in complex environments.

Machine learning has changed this equation dramatically.

Modern algorithms can now interpret spatial data in real time, allowing AR applications to build accurate environmental models on consumer devices.

This breakthrough enables digital content to interact naturally with physical spaces.

Key ML Approaches Transforming AR Mapping

Several machine learning approaches have proven particularly valuable for real-time environment mapping.

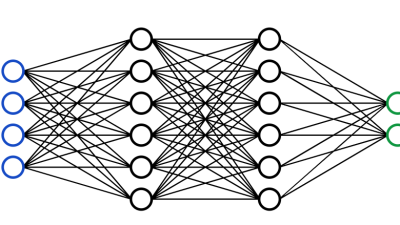

Convolutional Neural Networks (CNNs) excel at extracting visual features from camera inputs. These networks can identify walls, floors, furniture, and other structural elements fast enough to keep up with a live camera feed.
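The basic building block of a CNN is the 2D convolution: a small kernel slides across the image and responds strongly wherever a particular pattern, such as an edge, appears. The minimal sketch below uses a fixed, hand-picked Sobel kernel purely to show the operation; a trained network learns thousands of such kernels instead. The function names and the toy image are illustrative assumptions.

```python
from typing import List

Image = List[List[float]]

def conv2d_valid(image: Image, kernel: Image) -> Image:
    """Slide `kernel` over `image` with no padding ("valid" mode).

    Like most deep-learning frameworks, this is technically
    cross-correlation: the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for i in range(len(image) - kh + 1):
        row = []
        for j in range(len(image[0]) - kw + 1):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out

def relu(fmap: Image) -> Image:
    """Keep positive responses only, as CNN activation layers commonly do."""
    return [[max(0.0, v) for v in row] for row in fmap]

# Fixed Sobel kernel: fires on dark-to-bright vertical edges. A trained CNN
# would learn its kernels from data rather than use hand-picked ones.
SOBEL_X = [[-1.0, 0.0, 1.0],
           [-2.0, 0.0, 2.0],
           [-1.0, 0.0, 1.0]]

# Toy 5x6 image: dark left half, bright right half (e.g. a wall meeting a window).
img = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
edges = relu(conv2d_valid(img, SOBEL_X))
print(edges)  # each of the 3 rows is [0.0, 4.0, 4.0, 0.0]: strong response at the edge
```

Stacking many learned kernels, with nonlinearities and pooling in between, is what lets a CNN progress from edges to whole structures like walls and tables.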

Simultaneous Localization and Mapping (SLAM) algorithms enhanced by machine learning can track device position while building environmental maps. This dual capability is crucial for stable AR experiences.
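Production SLAM systems jointly optimize poses and map using filtering or graph optimization, and they correct drift through loop closure. The toy sketch below covers only the two bookkeeping steps described above: integrating motion to update the device pose (localization) and transforming observed landmarks into a shared world frame (mapping). It is 2D and noise-free, and the class and method names are hypothetical.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, Tuple

@dataclass
class Pose2D:
    x: float = 0.0
    y: float = 0.0
    theta: float = 0.0  # heading in radians; device frame: x forward, y left

@dataclass
class ToySlam:
    pose: Pose2D = field(default_factory=Pose2D)
    landmarks: Dict[str, Tuple[float, float]] = field(default_factory=dict)

    def move(self, forward: float, turn: float) -> None:
        """Localization: dead-reckon the pose from odometry (drive, then turn)."""
        self.pose.x += forward * math.cos(self.pose.theta)
        self.pose.y += forward * math.sin(self.pose.theta)
        self.pose.theta = (self.pose.theta + turn) % (2 * math.pi)

    def observe(self, name: str, local_x: float, local_y: float) -> None:
        """Mapping: convert a landmark seen in the device frame to world coordinates."""
        c, s = math.cos(self.pose.theta), math.sin(self.pose.theta)
        wx = self.pose.x + c * local_x - s * local_y
        wy = self.pose.y + s * local_x + c * local_y
        if name in self.landmarks:
            # Average with the previous estimate: a crude stand-in for a real filter update.
            px, py = self.landmarks[name]
            wx, wy = (px + wx) / 2, (py + wy) / 2
        self.landmarks[name] = (wx, wy)

slam = ToySlam()
slam.observe("door", 2.0, 0.0)            # door seen 2 m straight ahead
slam.move(forward=1.0, turn=math.pi / 2)  # step forward 1 m, then turn left
slam.observe("door", 0.0, -1.0)           # door is now 1 m to the right
print(slam.landmarks["door"])             # approximately (2.0, 0.0)
```

Both observations agree on the door's world position only because the pose was updated correctly in between; that coupling between localization and mapping is what the "simultaneous" in SLAM refers to.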

Depth estimation networks predict per-pixel distances from ordinary camera images. This allows even single-camera devices to build approximate 3D models of their surroundings.
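Once a network has predicted a depth value for every pixel, turning that depth map into 3D points is standard pinhole-camera geometry. The sketch assumes known camera intrinsics (focal lengths `fx`, `fy` and principal point `cx`, `cy`); the depth values and intrinsics in the example are made up for illustration and do not come from any real device.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]

def backproject(depth: List[List[float]], fx: float, fy: float,
                cx: float, cy: float) -> List[Point3D]:
    """Lift a per-pixel depth map (in metres) into camera-frame 3D points.

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy, Z = depth.
    Pixels with non-positive depth (no valid prediction) are skipped.
    """
    points = []
    for v, row in enumerate(depth):       # v = pixel row
        for u, z in enumerate(row):       # u = pixel column
            if z <= 0:
                continue
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points

# Illustrative 2x2 depth map and intrinsics.
depth_map = [[2.0, 2.0],
             [0.0, 4.0]]
cloud = backproject(depth_map, fx=100.0, fy=100.0, cx=0.5, cy=0.5)
print(cloud)  # three points; the zero-depth pixel is dropped
```

Accumulating such point clouds across frames, using the device poses from tracking, is one way a single camera ends up producing a 3D model of the room.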

These technologies work together to create a comprehensive understanding of physical spaces.


Real-Time Processing Breakthroughs

The most impressive aspect of modern mapping algorithms is their speed.

Early environment mapping systems required seconds or even minutes to process a space.

Today’s machine learning models can analyze and map environments at 30-60 frames per second.
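A frame rate translates directly into a per-frame time budget that camera capture, inference, map updates, and rendering must all share. The arithmetic is simple:

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to process one frame at a given frame rate."""
    return 1000.0 / fps

for fps in (30, 60):
    print(f"{fps} fps -> {frame_budget_ms(fps):.1f} ms per frame")
# 30 fps -> 33.3 ms per frame
# 60 fps -> 16.7 ms per frame
```

At 60 fps, the whole pipeline has under 17 ms per frame, which is why mapping models for mobile AR are designed around tight latency limits rather than accuracy alone.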

This real-time capability makes AR feel responsive and natural.

Edge computing optimizations allow complex algorithms to run efficiently on mobile devices, eliminating the need for cloud processing in many applications.
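The paragraph does not name specific optimizations; one widely used example is quantization. Storing weights as 8-bit integers instead of 32-bit floats cuts weight storage roughly fourfold and often allows faster integer arithmetic on mobile hardware. Below is a minimal symmetric, per-tensor scheme in plain Python, written for illustration; it is not the API of any particular framework, and the weights are arbitrary.

```python
from typing import List, Tuple

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: w ≈ scale * q, with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: List[int], scale: float) -> List[float]:
    """Map integers back to approximate float weights."""
    return [v * scale for v in q]

weights = [0.5, -1.27, 0.0, 0.333]
q, scale = quantize_int8(weights)
print(q)                        # integer codes, one byte each
print(dequantize(q, scale))     # close to the original weights
```

Each reconstructed weight is off by at most half a quantization step (`scale / 2`); real toolchains add refinements such as per-channel scales and calibration data to keep that error from hurting accuracy.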

The result is AR that responds instantly to changes in your environment.

Overcoming Traditional Mapping Challenges

Machine learning algorithms have eased several persistent problems that plagued earlier mapping technologies.

Lighting variations are far less likely to derail environment recognition. Neural networks trained on diverse imagery can compensate for much of the effect of shadows, glare, and low light.
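Robustness to lighting is usually learned rather than hand-coded. One common ingredient is photometric augmentation: during training, images are randomly brightened, darkened, and contrast-shifted, so the network sees the same scene under many lighting conditions. The sketch below is illustrative only; the function name and jitter ranges are assumptions, not tuned values from any real system.

```python
import random
from typing import List

Image = List[List[float]]  # grayscale, values in [0, 1]

def photometric_jitter(img: Image, rng: random.Random,
                       max_brightness: float = 0.2,
                       contrast_range: tuple = (0.7, 1.3),
                       gamma_range: tuple = (0.7, 1.5)) -> Image:
    """Randomly perturb brightness, contrast, and gamma; clip to [0, 1]."""
    b = rng.uniform(-max_brightness, max_brightness)
    c = rng.uniform(*contrast_range)
    g = rng.uniform(*gamma_range)
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    out = []
    for row in img:
        new_row = []
        for p in row:
            p = (p - mean) * c + mean + b   # contrast around the mean, then brightness
            p = min(1.0, max(0.0, p))       # clip so the gamma step stays in [0, 1]
            new_row.append(p ** g)          # gamma < 1 lifts shadows, > 1 darkens them
        out.append(new_row)
    return out

rng = random.Random(0)
sample = [[0.1, 0.4], [0.6, 0.9]]
augmented = [photometric_jitter(sample, rng) for _ in range(3)]  # three "lighting conditions"
```

Because every variant keeps the same labels (wall, floor, and so on), the network is pushed to rely on structure rather than absolute brightness.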

Reflective and transparent surfaces, which earlier systems could barely map, can increasingly be detected and handled appropriately.

Dynamic environments with moving objects can be tracked and updated continuously in the spatial model.
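A classic way to keep a map current when things move is a probabilistic occupancy grid. Each cell stores the log-odds that it is occupied, and every new observation nudges that value up or down, so a chair that gets moved gradually fades from the map instead of persisting forever. This is one well-known technique, shown with illustrative update constants; the paragraph above does not say which method any particular product uses, and the class name is hypothetical.

```python
import math
from typing import Dict, Tuple

Cell = Tuple[int, int]

class OccupancyGrid:
    """Sparse 2D occupancy grid using clamped log-odds updates."""

    def __init__(self, l_hit: float = 0.85, l_miss: float = -0.4,
                 l_min: float = -2.0, l_max: float = 3.5):
        # Illustrative constants; real systems tune these to their sensor noise.
        self.l_hit, self.l_miss = l_hit, l_miss
        self.l_min, self.l_max = l_min, l_max
        self.logodds: Dict[Cell, float] = {}

    def update(self, cell: Cell, occupied: bool) -> None:
        delta = self.l_hit if occupied else self.l_miss
        value = self.logodds.get(cell, 0.0) + delta
        # Clamping keeps cells responsive: a long-occupied cell can still be cleared.
        self.logodds[cell] = min(self.l_max, max(self.l_min, value))

    def probability(self, cell: Cell) -> float:
        """Convert log-odds back to P(occupied); unseen cells are 0.5."""
        return 1.0 - 1.0 / (1.0 + math.exp(self.logodds.get(cell, 0.0)))

grid = OccupancyGrid()
for _ in range(5):
    grid.update((3, 4), occupied=True)    # a chair is observed at cell (3, 4)
print(f"{grid.probability((3, 4)):.2f}")  # high: the cell is confidently occupied
for _ in range(10):
    grid.update((3, 4), occupied=False)   # the chair is moved; the cell now looks empty
print(f"{grid.probability((3, 4)):.2f}")  # below 0.5: the map has caught up
```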

These improvements drastically expand the environments where AR can function effectively.

Environmental Understanding Beyond Geometry

Modern mapping algorithms don’t just capture the shape of spaces; they also interpret what those spaces contain.

Semantic segmentation networks can identify different types of surfaces and objects. This allows AR content to interact differently with walls versus furniture.
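A semantic segmentation network outputs a score for each class at every pixel, and the predicted label is the highest-scoring class. Application logic can then branch on that label. In the sketch below, the class list, the scores, and the placement rule are all hypothetical stand-ins for a real model's output and a real app's behaviour.

```python
from typing import List

CLASSES = ["floor", "wall", "furniture"]  # hypothetical label set

def argmax_labels(scores: List[List[List[float]]]) -> List[List[str]]:
    """scores[row][col][k] is the network's score for class k at that pixel."""
    return [[CLASSES[max(range(len(px)), key=px.__getitem__)] for px in row]
            for row in scores]

def placement_rule(label: str) -> str:
    """Hypothetical app logic: different behaviour for different surfaces."""
    return {"floor": "place furniture", "wall": "hang artwork"}.get(label, "avoid")

scores = [[[2.1, 0.3, 0.1], [0.2, 1.7, 0.4]],
          [[0.1, 0.2, 3.0], [1.9, 0.5, 0.2]]]
labels = argmax_labels(scores)
print(labels)                        # [['floor', 'wall'], ['furniture', 'floor']]
print(placement_rule(labels[0][1]))  # hang artwork
```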

Material recognition can distinguish between wood, metal, glass, and other substances, enabling realistic physics interactions.

Context awareness helps AR systems understand the function of different spaces, whether you’re in a kitchen, office, or outdoor environment.

This deeper understanding enables more meaningful AR interactions.

Practical Applications Emerging Today

These mapping technologies are creating entirely new possibilities for augmented reality applications.

Interior design platforms can suggest furniture that perfectly fits your space, accounting for existing pieces and room dimensions.

Navigation systems can guide users through complex buildings with visual overlays that understand doorways, stairs, and obstacles.

Remote collaboration tools allow multiple users to share a mapped environment, with each participant seeing the same spatial model.

Gaming experiences can incorporate real-world elements as part of gameplay, with virtual characters navigating around your actual furniture.

Industrial maintenance applications can identify machinery parts and overlay repair instructions directly on the equipment.

Technical Implementation Considerations

Implementing these advanced mapping algorithms requires careful planning.

Mobile device constraints remain a significant consideration. Battery life and thermal management must be balanced against mapping accuracy.

Sensor fusion combines data from cameras, accelerometers, gyroscopes, and sometimes LiDAR to improve mapping quality.
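A simple, classic illustration of sensor fusion is the complementary filter. It blends a gyroscope (smooth but drifting) with an accelerometer (noisy but drift-free) to estimate device tilt. AR tracking in practice relies on more elaborate visual-inertial estimators, so treat this only as a demonstration of the principle. The blend factor `alpha`, the gyro bias, and the sample rate are assumed values.

```python
import math

def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch angle (radians) implied by the direction of gravity in accelerometer data."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))

def complementary_filter(pitch: float, gyro_rate: float, accel: tuple,
                         dt: float, alpha: float = 0.98) -> float:
    """Trust the integrated gyro short-term, the accelerometer long-term."""
    gyro_estimate = pitch + gyro_rate * dt
    return alpha * gyro_estimate + (1 - alpha) * accel_pitch(*accel)

# Device held still at 0.2 rad pitch; the gyro reports a spurious 0.05 rad/s bias.
true_pitch, g = 0.2, 9.81
accel = (-g * math.sin(true_pitch), 0.0, g * math.cos(true_pitch))
gyro_bias, dt = 0.05, 0.01          # 100 Hz samples
fused = gyro_only = true_pitch      # both start at the correct angle
for _ in range(1000):               # 10 seconds
    gyro_only += gyro_bias * dt
    fused = complementary_filter(fused, gyro_bias, accel, dt)
print(f"gyro only: {gyro_only:.3f}, fused: {fused:.3f}, true: {true_pitch}")
```

Gyro integration alone drifts by the full bias times elapsed time, while the fused estimate settles with only a small, bounded offset; the accelerometer term keeps pulling it back toward the true tilt.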

Privacy concerns must be addressed when capturing and potentially storing spatial data from user environments.
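One possible mitigation is to persist only coarse geometry rather than raw camera frames or dense scans. Voxel-grid downsampling, sketched below, replaces all points inside each cube of space with their average, discarding fine detail. The voxel size and sample points are assumptions for illustration, and coarser geometry by itself does not guarantee privacy.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]

def voxel_downsample(points: List[Point], voxel_size: float) -> List[Point]:
    """Replace all points falling in the same cubic voxel by their centroid."""
    buckets: Dict[Tuple[int, ...], List[Point]] = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets[key].append(p)
    return [tuple(sum(axis) / len(pts) for axis in zip(*pts))
            for pts in buckets.values()]

scan = [(0.01, 0.02, 1.00), (0.03, 0.01, 1.02), (0.52, 0.00, 1.01)]
downsampled = voxel_downsample(scan, voxel_size=0.25)
print(downsampled)  # 2 points: the first two scan points share a voxel
```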

Cross-platform support takes deliberate effort, since mapping quality must stay consistent across devices with different cameras, sensors, and operating systems.

The Future Path of ML-Driven Environment Mapping

The evolution of this technology shows no signs of slowing down.

Cooperative mapping will allow multiple devices to contribute to a shared environmental model, improving accuracy and coverage.

Long-term spatial memory will enable AR systems to remember environments between sessions, eliminating repeated mapping needs.
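At its simplest, remembering an environment between sessions means serializing the map and restoring it later. Real systems must also relocalize the device against the stored map when a session resumes, which this sketch omits. Here a hypothetical map of named anchors is saved to JSON; the file name, format, and function names are illustrative and do not correspond to any vendor's API.

```python
import json
import os
import tempfile
from typing import Dict, List

AnchorMap = Dict[str, List[float]]  # anchor name -> [x, y, z] in the map frame

def save_map(anchors: AnchorMap, path: str) -> None:
    """Write anchors to disk with a version tag so the format can evolve."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump({"version": 1, "anchors": anchors}, f)

def load_map(path: str) -> AnchorMap:
    """Read anchors back for a later session."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)["anchors"]

session_one = {"lamp": [0.4, 0.0, 1.2], "whiteboard": [-1.0, 1.5, 2.0]}
path = os.path.join(tempfile.gettempdir(), "room_map.json")
save_map(session_one, path)
session_two = load_map(path)        # a later session starts from the stored anchors
print(session_two == session_one)   # True
```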

Predictive modeling will anticipate changes in dynamic environments, creating more stable AR experiences.

These advancements will help AR blend ever more seamlessly with what we naturally see.

Conclusion

Machine learning algorithms have transformed environment mapping from a technical limitation into a powerful enabler of immersive AR experiences.

Real-time processing capabilities now allow AR applications to understand and respond to physical spaces instantly.

As these technologies continue to mature, we’ll see augmented reality that integrates more naturally and usefully into our everyday environments.

The future of AR isn’t just about placing digital objects in the world—it’s about creating experiences that truly understand the spaces we inhabit.

That understanding begins with advanced machine learning algorithms and their remarkable ability to map our world in real time.