AI Adaptive AR UX transforms user experiences by combining AI techniques such as reinforcement learning with contextual intelligence. By personalizing interactions, addressing AR inequality, and integrating emotion recognition, it delivers smarter, more immersive, and more inclusive AR interfaces across education, retail, healthcare, and entertainment.

Introduction to AI Adaptive AR UX

The rapid evolution of technology has reshaped the way we interact with digital environments, and AI Adaptive AR UX is at the forefront of this transformation. By combining artificial intelligence with augmented reality, designers and developers can create interfaces that adjust in real time to user behavior, context, and preferences. Unlike static AR experiences, these adaptive interfaces learn from interactions, offering a seamless, personalized experience that feels intuitive and natural.

In modern AR applications, personalization is no longer optional. Users expect experiences that respond intelligently to their needs, and AI Adaptive AR UX addresses this with algorithms that predict user intent and adjust interface elements dynamically. From immersive gaming to educational tools, adaptive AR interfaces are redefining engagement and usability.

How AI Adaptive AR UX Works

At the core of AI Adaptive AR UX is the concept of continuous learning. Machine learning models analyze user interactions and environmental cues to modify AR elements in real time. For example, if a user frequently focuses on a particular menu or control, the system can prioritize that element, making navigation smoother. Reinforcement learning techniques treat successful interactions as a reward signal, so the system gradually favors the adaptations that lead to them, improving interface efficiency and user satisfaction over time.
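
The "prioritize what the user focuses on" behavior can be sketched without any deep learning. The minimal example below assumes interactions arrive as element IDs and keeps exponentially decayed counts, so recent habits outweigh old ones. The `MenuPrioritizer` class and the `decay` value are hypothetical illustrations rather than part of any AR SDK, and this frequency heuristic is simpler than the reinforcement learning approach described above.

```python
class MenuPrioritizer:
    """Ranks menu elements by exponentially decayed interaction counts (hypothetical helper)."""

    def __init__(self, elements, decay=0.9):
        self.decay = decay  # fraction of each old score kept at every new interaction
        self.scores = {e: 0.0 for e in elements}

    def record(self, element):
        # Decay every score, then credit the element the user just focused on.
        for e in self.scores:
            self.scores[e] *= self.decay
        self.scores[element] = self.scores.get(element, 0.0) + 1.0

    def ranked(self):
        # Highest score first; ties fall back to alphabetical order for a stable layout.
        return sorted(self.scores, key=lambda e: (-self.scores[e], e))


p = MenuPrioritizer(["settings", "share", "measure"])
for e in ["measure", "measure", "share", "measure"]:
    p.record(e)
print(p.ranked())
```

The decay factor controls how quickly the layout forgets old habits: values closer to 1.0 give a more stable menu, smaller values react faster.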

Additionally, AI for Contextual AR enhances the experience by understanding the user’s environment. Contextual awareness allows the system to adjust visual overlays, notifications, and interactive elements based on location, activity, or surrounding objects. This capability ensures that AR experiences are not only personalized but also contextually relevant, making them far more effective and engaging.
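
In its simplest form, contextual filtering of this kind can be expressed as a rule table. The sketch below assumes upstream components already supply an activity label, a coarse location, and detected object labels; the `Context` fields, activity names, overlay categories, and rules are all hypothetical, and a production system would more likely learn or tune such rules than hard-code them.

```python
from dataclasses import dataclass


@dataclass
class Context:
    activity: str          # e.g. "walking", "stationary", "driving" (hypothetical labels)
    location: str          # coarse place label, e.g. "store", "home"
    nearby_objects: list   # labels reported by an object detector


# Overlay categories allowed per activity (illustrative rules only).
ALLOWED = {
    "driving": {"safety"},
    "walking": {"safety", "navigation"},
    "stationary": {"safety", "navigation", "notification", "product_info"},
}


def visible_overlays(ctx, candidates):
    """Return the names of candidate overlays that fit the current context."""
    allowed = ALLOWED.get(ctx.activity, {"safety"})  # unknown activity: safety overlays only
    shown = []
    for overlay in candidates:
        if overlay["category"] not in allowed:
            continue
        # Product information is only relevant inside a store.
        if overlay["category"] == "product_info" and ctx.location != "store":
            continue
        # Object-anchored overlays only make sense if their anchor object is in view.
        anchor = overlay.get("anchor")
        if anchor is not None and anchor not in ctx.nearby_objects:
            continue
        shown.append(overlay["name"])
    return shown


candidates = [
    {"name": "shoe_price", "category": "product_info", "anchor": "shoe"},
    {"name": "lamp_price", "category": "product_info", "anchor": "lamp"},
    {"name": "new_message", "category": "notification"},
]
print(visible_overlays(Context("stationary", "store", ["shoe"]), candidates))
```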

Benefits of Adaptive AR Interfaces

The adoption of AI Adaptive AR UX offers numerous benefits across industries. In education, adaptive AR interfaces can present learning materials tailored to a student’s pace and comprehension, enhancing retention and engagement. In retail, personalized AR shopping experiences can recommend products based on past interactions, browsing habits, and even emotional responses. Here, Emotion Recognition AI plays a pivotal role by subtly analyzing user expressions to adjust content delivery in real time.

In addition, adaptive AR can help bridge the gap caused by AR Inequality, ensuring that users with different hardware capabilities or accessibility needs receive optimized experiences. By learning and adjusting dynamically, AI-driven interfaces reduce barriers and enhance inclusivity in AR applications.

Role of Federated Learning in AR

One of the challenges in developing intelligent AR interfaces is maintaining user privacy while collecting behavioral data. Federated Learning in AR addresses this by training AI models directly on users’ devices rather than on central servers: only model updates, not raw interaction data, are sent off the device for aggregation. This approach allows systems to learn from vast amounts of interaction data while greatly reducing privacy exposure. The result is a more secure, scalable, and adaptive AR UX that respects user confidentiality while improving performance over time.
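
A minimal sketch of federated averaging (FedAvg), the canonical aggregation scheme in federated learning, is shown below using NumPy. Each simulated device runs a few steps of gradient descent on its own data and shares only its updated weights, which the server averages in proportion to each device's sample count. The linear model, learning rate, and synthetic data are simplifying assumptions; real deployments add client sampling, secure aggregation, and often differential privacy.

```python
import numpy as np


def local_update(weights, X, y, lr=0.1, epochs=5):
    """Runs a few epochs of gradient descent for linear regression on one device's data."""
    w = weights.copy()
    for _ in range(epochs):
        grad = 2 * X.T @ (X @ w - y) / len(y)  # gradient of the mean squared error
        w -= lr * grad
    return w


def federated_average(client_weights, client_sizes):
    """Server step: average client models, weighting each by its number of samples."""
    sizes = np.asarray(client_sizes, dtype=float)
    stacked = np.stack(client_weights)
    return (stacked * sizes[:, None]).sum(axis=0) / sizes.sum()


rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])
# Each simulated device keeps its own data; only weight vectors leave the device.
clients = []
for n in (50, 80, 30):
    X = rng.normal(size=(n, 2))
    clients.append((X, X @ true_w))

global_w = np.zeros(2)
for _ in range(20):  # communication rounds
    updates = [local_update(global_w, X, y) for X, y in clients]
    global_w = federated_average(updates, [len(y) for _, y in clients])
print(np.round(global_w, 3))
```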

By integrating federated learning, AI Adaptive AR UX systems can refine personalization strategies, improve predictive accuracy, and ensure that the interface evolves alongside user behavior. This is especially important in enterprise applications where sensitive data must be protected but optimal usability is still required.

AI vs Human in Market Research and AR UX

The development of adaptive AR interfaces also benefits from insights derived from market research. Comparing AI vs Human in Market Research reveals that AI can process vast datasets, identify patterns, and predict preferences far faster than humans. These insights feed directly into interface design, allowing AI Adaptive AR UX to anticipate user needs and streamline interactions.

While human researchers excel at understanding nuanced emotions and cultural context, AI can augment their efforts by continuously monitoring usage patterns and making rapid adjustments. Combining both approaches ensures that adaptive AR interfaces are grounded in real user behavior and informed by large-scale data analysis.

Practical Applications of AI Adaptive AR UX

The potential of AI Adaptive AR UX extends across a wide range of industries, from entertainment and education to healthcare and retail. By integrating AI-driven adaptivity into AR interfaces, organizations can provide experiences that are not only immersive but also intelligently responsive to user behavior.

In the healthcare sector, AI Adaptive AR UX is being used to assist surgeons during complex procedures. By tracking gaze patterns and hand movements, adaptive interfaces can highlight critical anatomical structures or suggest next steps, ensuring precision and reducing cognitive load. This creates an environment where the technology seamlessly supports human expertise rather than replacing it.

In retail, adaptive AR interfaces are revolutionizing the shopping experience. Users can virtually try on products in real time, with the system dynamically adjusting recommendations based on past interactions. Coupled with AI Conversational Commerce, these AR platforms can engage users in personalized dialogues, guiding them through product options and enhancing purchase confidence.

Technical Framework Behind Adaptive AR Interfaces

Implementing AI Adaptive AR UX requires a combination of machine learning techniques, sensor integration, and real-time analytics. Reinforcement learning is often employed to optimize interface elements based on user interactions. For instance, if a user consistently navigates through certain menus faster than others, the system learns to prioritize those elements, streamlining future interactions.
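
To make the reinforcement learning idea concrete, here is a minimal epsilon-greedy multi-armed bandit, one of the simplest reinforcement learning formulations, that chooses which menu layout to show and treats faster navigation as higher reward. The layout names, the reward definition (the inverse of navigation time), and the simulated timings are illustrative assumptions.

```python
import random


class EpsilonGreedyLayout:
    """Epsilon-greedy bandit over candidate menu layouts (hypothetical example)."""

    def __init__(self, layouts, epsilon=0.1, seed=None):
        self.layouts = list(layouts)
        self.epsilon = epsilon
        self.counts = {name: 0 for name in self.layouts}
        self.values = {name: 0.0 for name in self.layouts}  # running mean reward per layout
        self.rng = random.Random(seed)

    def choose(self):
        # Explore a random layout with probability epsilon; otherwise exploit the best so far.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.layouts)
        return max(self.layouts, key=lambda name: self.values[name])

    def update(self, layout, seconds_to_complete):
        # Reward is the inverse of navigation time, so faster completion scores higher.
        reward = 1.0 / seconds_to_complete
        self.counts[layout] += 1
        self.values[layout] += (reward - self.values[layout]) / self.counts[layout]


# Simulated users: layout "B" is navigated fastest on average (made-up timings).
mean_time = {"A": 4.0, "B": 2.5, "C": 5.0}
agent = EpsilonGreedyLayout(mean_time, epsilon=0.1, seed=42)
env = random.Random(7)
for _ in range(2000):
    layout = agent.choose()
    seconds = max(0.5, env.gauss(mean_time[layout], 0.5))
    agent.update(layout, seconds)
print(max(agent.values, key=agent.values.get))
```

Epsilon-greedy is used here only for brevity; strategies such as UCB or Thompson sampling usually explore more efficiently.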

Contextual awareness plays a crucial role in these adaptive systems. By leveraging AI for Contextual AR, the interface can interpret environmental factors such as lighting, spatial layout, and user movement. This ensures that virtual elements are placed accurately and remain relevant, creating a seamless blend between digital and physical worlds.
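
As one example of using environmental cues, the sketch below maps an ambient light estimate (assumed to be in lux) to overlay styling, so labels stay legible in bright sunlight without glaring in dim rooms. The thresholds and the `OverlayStyle` fields are hypothetical; AR frameworks report light estimates in their own units, so a real mapping would need per-platform calibration.

```python
from dataclasses import dataclass


@dataclass
class OverlayStyle:
    opacity: float     # 0.0 (transparent) to 1.0 (opaque)
    outline: bool      # draw a dark outline behind text for contrast
    brightness: float  # multiplier applied to overlay colors


def style_for_lighting(lux):
    """Choose overlay styling from an ambient light estimate (illustrative thresholds)."""
    if lux < 50:    # dim room: soften overlays to avoid glare
        return OverlayStyle(opacity=0.7, outline=False, brightness=0.6)
    if lux < 1000:  # typical indoor lighting
        return OverlayStyle(opacity=0.85, outline=False, brightness=1.0)
    # Bright outdoor light: maximize contrast.
    return OverlayStyle(opacity=1.0, outline=True, brightness=1.3)


for lux in (20, 300, 20000):
    print(lux, style_for_lighting(lux))
```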

Addressing AR Inequality Through Adaptive UX

Despite the growth of AR, AR Inequality remains a challenge. Users with less advanced hardware or limited connectivity often experience reduced functionality or slower response times. AI Adaptive AR UX mitigates this by dynamically adjusting visual fidelity, interaction complexity, and processing load based on device capabilities.

For example, a user on a high-end device may experience highly detailed graphics and complex interaction options, while a user on a mid-range device receives a simplified yet fully functional interface. This ensures inclusivity without compromising user experience, allowing a broader audience to benefit from adaptive AR technologies.
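
Capability-based scaling can be sketched as a tier selector that runs once at startup. The `DeviceProfile` fields, tier thresholds, and per-tier settings below are hypothetical and would need profiling on real hardware; the point is that every tier keeps the same core features while only fidelity settings change.

```python
from dataclasses import dataclass


@dataclass
class DeviceProfile:
    ram_gb: float
    has_depth_sensor: bool
    bandwidth_mbps: float


# Render settings per tier (illustrative values).
TIERS = {
    "high": {"mesh_detail": "full", "max_anchors": 50, "occlusion": True, "texture_px": 2048},
    "mid": {"mesh_detail": "reduced", "max_anchors": 20, "occlusion": False, "texture_px": 1024},
    "low": {"mesh_detail": "billboard", "max_anchors": 8, "occlusion": False, "texture_px": 512},
}


def select_tier(device):
    """Pick the richest tier the device can sustain; core features exist in every tier."""
    if device.ram_gb >= 8 and device.has_depth_sensor and device.bandwidth_mbps >= 50:
        return "high"
    if device.ram_gb >= 4 and device.bandwidth_mbps >= 10:
        return "mid"
    return "low"


phone = DeviceProfile(ram_gb=6, has_depth_sensor=False, bandwidth_mbps=25)
tier = select_tier(phone)
print(tier, TIERS[tier])
```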

Emotion Recognition AI in Adaptive Interfaces

Integrating Emotion Recognition AI further enhances AI Adaptive AR UX by allowing interfaces to respond to users’ emotional states. For instance, if a user shows signs of frustration while navigating an AR tutorial, the system can offer hints, slow down instructions, or simplify the interface to reduce stress. Conversely, positive emotional cues can trigger more advanced content or interactive challenges, keeping the experience engaging and satisfying.
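
The frustration and positive-cue behavior described above can be expressed as a small policy that maps emotion estimates to interface adjustments. The sketch assumes an upstream classifier (not shown) outputs a label and a confidence score; the labels, the 0.6 confidence threshold, and the action names are hypothetical. Acting only on confident, repeated signals is one way to avoid overreacting to noisy emotion estimates.

```python
from collections import deque


class EmotionAdaptivePolicy:
    """Maps smoothed emotion estimates to tutorial adjustments (hypothetical)."""

    def __init__(self, window=5, min_confidence=0.6):
        self.recent = deque(maxlen=window)  # most recent confident labels
        self.min_confidence = min_confidence

    def observe(self, label, confidence):
        # Ignore low-confidence estimates entirely.
        if confidence >= self.min_confidence:
            self.recent.append(label)

    def action(self):
        if not self.recent:
            return "keep_current"
        frustrated = self.recent.count("frustrated")
        positive = self.recent.count("engaged") + self.recent.count("happy")
        # Change the interface only when a majority of recent confident labels agree.
        if frustrated > len(self.recent) / 2:
            return "offer_hint_and_slow_down"
        if positive > len(self.recent) / 2:
            return "unlock_advanced_challenge"
        return "keep_current"


policy = EmotionAdaptivePolicy()
for label, conf in [("frustrated", 0.8), ("neutral", 0.9), ("frustrated", 0.7), ("frustrated", 0.4)]:
    policy.observe(label, conf)
print(policy.action())
```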

Emotion-sensitive interfaces are particularly valuable in educational and gaming contexts, where maintaining engagement is critical. By combining adaptive mechanics with emotional intelligence, AI Adaptive AR UX delivers experiences that feel personalized on both a functional and emotional level.

Security and Privacy Considerations

As adaptive AR interfaces collect extensive user data to improve personalization, privacy and security are critical concerns. Federated learning helps balance these needs by keeping sensitive data on-device while still contributing to model improvements. This lets AI Adaptive AR UX benefit from large-scale learning without sending raw personal information to central servers.

Moreover, security protocols must protect interactions, particularly when AR applications integrate commerce or financial data. By adopting robust encryption and anonymization methods, developers can maintain user trust while enabling advanced adaptive features.
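
One common building block is pseudonymizing identifiers before interaction events reach analytics, for example with a keyed hash (HMAC-SHA256 from Python's standard library). This is a sketch rather than a complete anonymization scheme: the key must be kept secret and rotated, pseudonymized data can still be re-identified through other fields, and the event fields shown are hypothetical.

```python
import hashlib
import hmac
import json


def pseudonymize(user_id, secret_key):
    """Replace a user ID with a keyed hash so analytics never sees the raw ID."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_event(event, secret_key):
    """Keep only needed fields, pseudonymize the user, and coarsen location (illustrative)."""
    return {
        "user": pseudonymize(event["user_id"], secret_key),
        "action": event["action"],
        # Round coordinates to 2 decimal places (roughly 1 km) so exact locations are not kept.
        "lat": round(event["lat"], 2),
        "lon": round(event["lon"], 2),
    }


key = b"replace-with-a-secret-from-a-key-store"
raw = {"user_id": "alice@example.com", "action": "tap_menu",
       "lat": 48.85837, "lon": 2.29448, "email": "alice@example.com"}
print(json.dumps(prepare_event(raw, key)))
```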

Case Studies of AI Adaptive AR UX

Several real-world implementations demonstrate how AI Adaptive AR UX is transforming user experiences. In industrial training, for example, AR headsets equipped with adaptive interfaces adjust instructions based on worker proficiency. If a trainee repeatedly struggles with a procedure, the system dynamically slows down instructions, highlights critical steps, and even offers interactive prompts to guide them. Over time, these adaptive experiences enhance skill acquisition while reducing error rates.
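
The trainee adaptation described here can be sketched as per-step failure tracking that escalates support after repeated struggles and withdraws it as the trainee succeeds. The escalation thresholds, support levels, and the `TrainingStepCoach` name are hypothetical; a deployed system would calibrate them with trainers.

```python
from collections import defaultdict

# Support escalates as failures on the same step accumulate (illustrative levels).
SUPPORT_LEVELS = [
    {"speed": 1.0, "highlight": False, "prompt": False},  # default pacing
    {"speed": 0.75, "highlight": True, "prompt": False},  # after repeated failures
    {"speed": 0.5, "highlight": True, "prompt": True},    # persistent difficulty
]


class TrainingStepCoach:
    def __init__(self, failures_per_level=2):
        self.failures = defaultdict(int)  # step name -> outstanding failure count
        self.failures_per_level = failures_per_level

    def record_attempt(self, step, succeeded):
        if succeeded:
            # Gradually withdraw support as proficiency improves.
            self.failures[step] = max(0, self.failures[step] - 1)
        else:
            self.failures[step] += 1

    def support_for(self, step):
        level = min(self.failures[step] // self.failures_per_level, len(SUPPORT_LEVELS) - 1)
        return SUPPORT_LEVELS[level]


coach = TrainingStepCoach()
for ok in (False, False, False, False):
    coach.record_attempt("torque_bolts", ok)
print(coach.support_for("torque_bolts"))
```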

Retail environments also benefit from AI Adaptive AR UX. Major brands have integrated AR mirrors that use contextual data to adjust clothing recommendations in real time. When these mirrors are coupled with AI Conversational Commerce, customers can receive instant suggestions or ask questions through natural-language interfaces. The combination of adaptivity and conversational intelligence results in more satisfying, personalized shopping experiences.

In research contexts, AI vs Human in Market Research has shown that adaptive AR interfaces powered by AI can analyze behavioral data at scale more efficiently than human analysts alone. These systems can identify patterns, predict preferences, and optimize interface layouts to enhance engagement, demonstrating the potential of AI to complement human expertise.

Designing Effective AI Adaptive AR UX

Creating effective AI Adaptive AR UX requires careful attention to human psychology, cognitive load, and interaction design. Developers must balance automation with user control, ensuring the system adapts without creating confusion or frustration. Reinforcement learning models can support this by continuously evaluating user interactions and updating interface behaviors based on success rates.
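
One way to keep users in control is to let them pin elements that adaptation must never move, and to reorder only the remaining slots. The function below is a hypothetical sketch of that rule; the scores could come from any learned model, such as the success rates mentioned above.

```python
def adaptive_order(current, scores, pinned):
    """Reorder unpinned items by score while pinned items keep their positions."""
    movable = sorted((item for item in current if item not in pinned),
                     key=lambda item: -scores.get(item, 0.0))
    refill = iter(movable)
    result = []
    for item in current:
        # A pinned item stays in its slot; every other slot is refilled in score order.
        result.append(item if item in pinned else next(refill))
    return result


menu = ["home", "measure", "share", "settings"]
scores = {"measure": 0.2, "share": 0.9, "settings": 0.5}
print(adaptive_order(menu, scores, pinned={"home"}))
```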

Contextual intelligence is also critical. With AI for Contextual AR, interfaces can tailor their responses not only to individual user behavior but also to environmental factors such as location, lighting, and available space. This ensures that virtual elements remain legible, relevant, and useful across diverse situations.

Integration of Federated Learning in Practice

The integration of Federated Learning in AR is essential for maintaining privacy while enabling adaptive capabilities. For instance, AR education platforms can refine learning modules based on collective data from thousands of devices without transferring sensitive information to central servers. This distributed approach protects user data while allowing AI models to improve continually, enhancing the effectiveness of AI Adaptive AR UX across applications.

Emotional Intelligence in AR Interfaces

Adaptive AR interfaces that incorporate Emotion Recognition AI can respond to subtle cues, creating experiences that feel intuitive and empathetic. For instance, an educational AR app can detect confusion or disengagement in students and adjust the difficulty or presentation style accordingly. Similarly, gaming experiences can use emotional feedback to adjust challenges, rewards, and narrative pacing, making interactions more immersive.

By integrating emotional intelligence, AI Adaptive AR UX moves beyond static interfaces, creating environments that actively respond to the user’s cognitive and emotional state. This enhances engagement, satisfaction, and long-term adoption of AR technologies.

Overcoming AR Inequality

Despite advancements, AR Inequality remains a significant concern. Not all users have access to high-end devices or high-speed connectivity, which can limit the effectiveness of AR experiences. AI Adaptive AR UX addresses this by adjusting content fidelity, interaction complexity, and processing requirements based on available hardware.

For example, users on entry-level devices can experience simplified AR elements that retain essential functionality, while users on premium hardware receive richer, more interactive experiences. This dynamic scaling ensures inclusivity and democratizes access to adaptive AR technologies.

Future Trends in AI Adaptive AR UX

Looking ahead, AI Adaptive AR UX will continue to evolve with advances in AI, machine learning, and AR hardware. The combination of reinforcement learning, federated learning, contextual AI, and emotion recognition will enable interfaces that are increasingly predictive, empathetic, and personalized.

Industries like healthcare, education, retail, and entertainment will see AR experiences that dynamically adapt to each user’s preferences, context, and emotional state. As adoption grows, addressing AR Inequality and integrating privacy-preserving technologies will remain critical to ensuring that these benefits are accessible to all users.

Technical Architecture of AI Adaptive AR UX

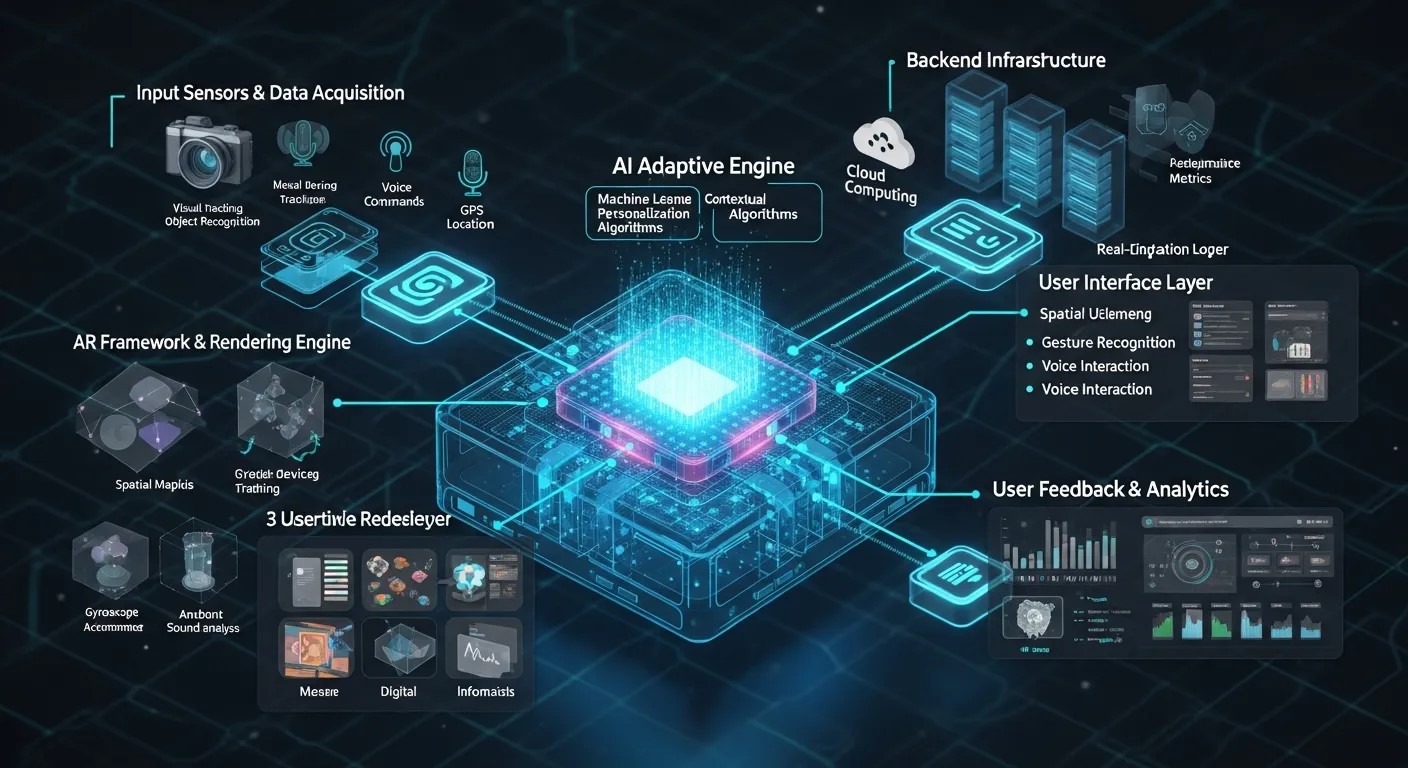

Building AI Adaptive AR UX involves integrating multiple layers of AI, AR, and user interface technologies. At the foundational level, sensor inputs from devices like cameras, motion trackers, and depth sensors provide real-time data on user movements, gestures, and environmental conditions. These inputs feed into AI models that process and interpret the information to guide adaptive interface behaviors.

Machine learning algorithms, particularly reinforcement learning, form the core of decision-making. These models evaluate the success of interface adaptations, use effective interactions as a reward signal, and continuously improve their predictions. This iterative learning lets AI Adaptive AR UX evolve alongside user behavior, delivering increasingly intuitive experiences.

AI Models Behind Adaptive Interfaces

Several AI models support adaptive AR experiences. Federated Learning in AR allows distributed learning across multiple devices without centralizing raw user data. Because sensitive data stays on-device, federated learning contributes to more robust and scalable AI Adaptive AR UX systems and can make compliance with privacy regulations easier.

Additionally, AI for Contextual AR integrates situational awareness, enabling interfaces to adjust dynamically based on environmental factors. For example, lighting conditions, spatial layout, or even ambient noise can influence how AR elements are displayed, ensuring clarity and relevance at all times.

Emotion-sensitive models, powered by Emotion Recognition AI, enable interfaces to detect subtle cues in facial expressions, voice, or gestures. This emotional feedback allows adaptive AR systems to respond appropriately by modifying instructions, offering hints, or adjusting interaction intensity, which helps maintain engagement and user satisfaction.

Optimizing User Engagement

Optimizing engagement is a critical goal of AI Adaptive AR UX. By analyzing patterns in user behavior, adaptive systems can identify friction points, anticipate needs, and streamline interactions. For example, if a user struggles with a menu interface repeatedly, the system can reorder options or provide contextual guidance.
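
Friction points can often be detected from interaction logs with simple heuristics before any learning is involved. The sketch below flags a menu as high-friction when a user opens it and backs out without selecting anything several times within a short window; the event format, the three-event threshold, and the 60-second window are assumptions for illustration.

```python
from collections import defaultdict, deque


class FrictionDetector:
    def __init__(self, threshold=3, window_s=60.0):
        self.threshold = threshold
        self.window_s = window_s
        self.abandons = defaultdict(deque)  # menu -> timestamps of open-then-cancel events

    def record(self, menu, event, t):
        """event is 'abandon' when the user closes a menu without choosing an item."""
        if event != "abandon":
            return None
        q = self.abandons[menu]
        q.append(t)
        # Drop abandons that fall outside the sliding time window.
        while q and t - q[0] > self.window_s:
            q.popleft()
        if len(q) >= self.threshold:
            q.clear()  # reset after triggering so guidance is not repeated immediately
            return "show_contextual_guidance"
        return None


d = FrictionDetector()
events = [("tools", "abandon", 0), ("tools", "select", 5), ("tools", "abandon", 20), ("tools", "abandon", 45)]
print([d.record(m, e, t) for m, e, t in events])
```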

Combining adaptive functionality with AI Conversational Commerce also enhances engagement in commercial applications. Virtual assistants embedded in AR interfaces can answer questions, suggest products, or guide decision-making, all in real time, creating seamless and persuasive interactions.

Balancing AI and Human Insight

While AI can analyze vast datasets and predict user behavior efficiently, human input remains invaluable. Comparing AI vs Human in Market Research highlights the complementary roles of AI and human expertise. Humans can provide nuanced understanding, cultural context, and ethical guidance, whereas AI scales insights and continuously adapts interfaces based on real-time data. Integrating both approaches ensures that AI Adaptive AR UX is not only efficient but also aligned with user expectations and psychological principles.

Performance Metrics and Evaluation

Measuring the effectiveness of AI Adaptive AR UX requires specific metrics. Commonly used indicators include task completion time, error rate, user engagement levels, emotional response, and satisfaction scores. By combining quantitative and qualitative analysis, developers can refine algorithms and interface designs.
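
As a small worked example, several of the indicators listed above can be computed from per-session logs. The `Session` fields and the 1 to 5 satisfaction scale are assumptions; emotional-response measures are omitted because they depend on the specific recognition model in use.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass
class Session:
    completed: bool
    seconds: float     # time spent on the task
    errors: int        # wrong selections or undone actions
    actions: int       # total actions taken
    satisfaction: int  # post-task rating on a 1-5 scale


def summarize(sessions):
    done = [s for s in sessions if s.completed]
    return {
        "completion_rate": len(done) / len(sessions),
        # The median is less sensitive than the mean to a few very slow sessions.
        "median_completion_time_s": median(s.seconds for s in done) if done else None,
        "error_rate": sum(s.errors for s in sessions) / sum(s.actions for s in sessions),
        "mean_satisfaction": mean(s.satisfaction for s in sessions),
    }


logs = [
    Session(True, 42.0, 1, 20, 4),
    Session(True, 55.0, 0, 18, 5),
    Session(False, 90.0, 4, 30, 2),
    Session(True, 38.0, 2, 22, 4),
]
print(summarize(logs))
```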

Adaptive systems also benefit from A/B testing, in which variations of AR interactions are evaluated to determine which designs maximize usability and engagement. This iterative process can be complemented by reinforcement learning, for example bandit algorithms that gradually shift traffic toward better-performing variants, supporting continuous improvement while maintaining a personalized experience for each user.
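
To check whether one AR interaction variant genuinely outperforms another on a binary outcome such as task completion, a standard choice is the two-proportion z-test. The sketch uses only the standard library; the variant counts are made-up numbers for illustration, and in practice the sample size should be fixed before the test starts.

```python
from math import erf, sqrt


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Two-sided z-test for a difference in success rates between variants A and B."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via erf, then a two-sided p-value.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - cdf)
    return z, p_value


# Hypothetical results: variant B uses an adaptive menu, variant A a static one.
z, p = two_proportion_z_test(success_a=412, n_a=1000, success_b=468, n_b=1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Repeatedly checking the p-value while a test is still running inflates false positives, which is one reason bandit-style allocation is sometimes preferred for continuous optimization.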

Security and Ethical Considerations

The widespread adoption of AI Adaptive AR UX brings ethical and security challenges. Maintaining user privacy, preventing data misuse, and ensuring fairness are critical. By leveraging Federated Learning in AR and anonymized analytics, adaptive AR systems can learn from user behavior without compromising sensitive data.

Moreover, addressing AR Inequality ethically means designing interfaces that adjust to different hardware capabilities, so that all users, regardless of device quality or connectivity, can access the core adaptive AR features, even when visual fidelity is scaled down.

Conclusion

AI Adaptive AR UX represents a paradigm shift in how users interact with augmented reality. By seamlessly combining reinforcement learning, contextual intelligence, emotion recognition, and privacy-preserving techniques like federated learning, adaptive AR interfaces provide personalized, immersive, and inclusive experiences. As technology evolves, these intelligent systems will continue to enhance engagement, bridge accessibility gaps, and transform industries from education and healthcare to retail and entertainment, demonstrating that the future of AR is not just visual but adaptive, intuitive, and human-centered.

Frequently Asked Questions (FAQ)

What is AI Adaptive AR UX?

AI Adaptive AR UX refers to augmented reality interfaces that use artificial intelligence to dynamically adjust content, layout, and interactions based on user behavior, context, and emotional responses, providing a personalized and intuitive experience.

How does reinforcement learning enhance AR interfaces?

Reinforcement learning enables AR systems to learn from user interactions. By rewarding successful actions and adapting interface elements over time, it improves usability, efficiency, and overall user satisfaction in AI Adaptive AR UX.

What role does emotion recognition AI play in adaptive AR?

Emotion Recognition AI allows AR interfaces to detect user emotions, such as frustration or engagement, and adapt content or guidance accordingly. This makes interactions more intuitive, immersive, and responsive to individual needs.

How does federated learning benefit AR UX?

Federated Learning in AR allows AI models to train on users’ devices without sending personal data to central servers. This enhances privacy while still enabling adaptive learning, improving performance, and maintaining secure AI Adaptive AR UX.

Can AI Adaptive AR UX reduce AR inequality?

It can help. Adaptive interfaces dynamically adjust features, visuals, and interactions based on hardware capabilities, connectivity, or accessibility needs, so that a wider audience experiences functional and engaging AR despite device limitations.

What industries benefit most from AI Adaptive AR UX?

Education, healthcare, retail, entertainment, and industrial training are key beneficiaries. Personalized interfaces, contextual intelligence, and real-time adaptivity enhance engagement, efficiency, and satisfaction across these sectors.

How does AI compare to humans in designing adaptive AR UX?

While humans excel in nuanced understanding and ethical considerations, AI can process large datasets, detect patterns, and predict user behavior in real time. Combining both approaches leads to more effective AI Adaptive AR UX solutions.