Augmented reality has evolved far beyond simple visual overlays. Today’s AR experiences deliver information that genuinely responds to our environment and needs.

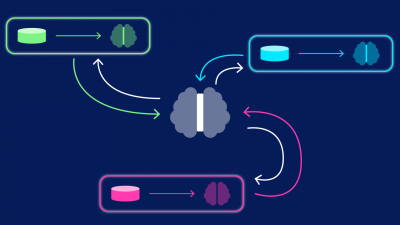

Behind this evolution lie transformer models – sophisticated neural networks originally developed for language processing but now transforming how AR systems understand context.

These powerful algorithms analyze multiple inputs simultaneously, drawing connections between what you’re seeing, what you’ve previously shown interest in, and what might be most relevant to your current situation.

The result? AR experiences that feel less like accessing technology and more like having an intuitive understanding of your surroundings.

How Transformer Models Work in AR Environments

Transformer models excel at processing relationships between different elements. Unlike earlier recurrent networks, which worked through a sequence one element at a time, transformers use a mechanism called “attention” to weigh every part of the input against every other part in parallel.

In AR applications, this means the system can consider multiple contextual factors at once: visual elements in view, user location, time of day, previous interactions, and even subtle cues like gaze direction or walking pace.
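
To make the attention idea concrete, here is a minimal sketch of scaled dot-product attention over a handful of context signals. The signal names, the two-dimensional vectors, and the query are invented for illustration; a real system would learn much larger embeddings, and nothing here reflects a specific AR product.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # The output is a weighted blend of all value vectors at once.
    mixed = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, mixed

# Hypothetical embeddings for three context signals (assumed values).
signals = ["storefront in view", "time: 12:30", "walking pace: slow"]
keys = [[1.0, 0.0], [0.8, 0.6], [0.0, 1.0]]
values = keys  # reuse the keys as values to keep the sketch small
query = [1.0, 0.2]  # hypothetical "what matters right now?" vector

weights, mixed = attention(query, keys, values)
for name, weight in zip(signals, weights):
    print(f"{name}: {weight:.2f}")
```

Every signal receives a weight in the same pass, which is what lets the model consider all contextual factors together rather than one after another.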

On suitably optimized hardware, this analysis can run fast enough to feel immediate, creating responsive experiences that feel natural rather than computational.

The architecture allows transformers to recognize patterns across different types of data, making them well suited to the multimodal nature of augmented reality.

Beyond Simple Recognition to Deep Understanding

Earlier AR systems operated through basic recognition – identifying a landmark or object and retrieving pre-written information.

Transformer-powered systems achieve something closer to understanding. They can recognize that you’re looking at a restaurant during lunchtime, recall your dietary preferences, and proactively offer menu recommendations that accommodate your restrictions.

This contextual awareness creates experiences that anticipate needs rather than simply responding to direct requests.

As noted by industry experts at AR Marketing Tips, this shift from reactive to predictive information delivery represents one of the most significant advancements in the field.

Personalization Through Behavioral Patterns

Transformer models excel at identifying patterns across time and interactions. In AR applications, this translates to deeply personalized experiences.

The system notices which types of information you engage with most frequently. It observes which presentation styles lead to positive responses from you. It identifies patterns in how you use AR in different environments.

These insights accumulate to create an experience tailored specifically to your preferences, often without requiring explicit customization settings.
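
As a rough illustration of how engagement signals might accumulate, the sketch below keeps an exponentially weighted score per information category. This is a deliberately simple stand-in for what a learned model would do, and the class name, categories, and learning rate are all assumptions made for the example.

```python
from collections import defaultdict

class EngagementProfile:
    """Tracks a running engagement score per information category."""

    def __init__(self, learning_rate=0.2):
        self.learning_rate = learning_rate
        self.scores = defaultdict(float)  # unseen categories start at 0.0

    def record(self, category, engaged):
        """Nudge the category score toward 1 (engaged) or 0 (ignored)."""
        target = 1.0 if engaged else 0.0
        old = self.scores[category]
        self.scores[category] = old + self.learning_rate * (target - old)

    def ranked(self):
        """Categories ordered from most to least engaging."""
        return sorted(self.scores, key=self.scores.get, reverse=True)

profile = EngagementProfile()
# Hypothetical interaction log: (category shown, did the user engage?)
log = [("reviews", True), ("prices", False), ("reviews", True),
       ("history", True), ("prices", False)]
for category, engaged in log:
    profile.record(category, engaged)

print(profile.ranked())
```

Because recent interactions move the score more than old ones, the ranking can drift as habits change, without the user ever opening a settings screen.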

This implicit personalization feels natural rather than intrusive – information simply begins appearing in ways that align with your established patterns.

Contextual Language Understanding in AR

Natural language plays an increasingly important role in AR interactions. Transformer models, originally designed for language processing, bring sophisticated linguistic understanding to these experiences.

When you ask a question about something in view, transformer-powered AR understands not just the literal words but the context behind them.

A question like “How much is that?” becomes meaningful because the system understands what “that” refers to based on where you’re looking or pointing.
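
One simple way to ground a word like “that” is to pick the visible object whose direction is closest to the user’s gaze. The sketch below assumes the AR runtime already supplies a gaze vector and a direction vector per detected object; the object labels, vectors, and the 10-degree threshold are hypothetical.

```python
import math

def angle_between(a, b):
    """Angle in degrees between two 3-d direction vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    cosine = max(-1.0, min(1.0, dot / norm))  # clamp against rounding error
    return math.degrees(math.acos(cosine))

def resolve_referent(gaze_direction, objects, max_angle_deg=10.0):
    """Return the label of the object nearest the gaze, or None if none is close."""
    best_label, best_angle = None, max_angle_deg
    for label, direction in objects.items():
        angle = angle_between(gaze_direction, direction)
        if angle <= best_angle:
            best_label, best_angle = label, angle
    return best_label

# Hypothetical scene: directions from the headset to each detected object.
objects = {
    "espresso machine": (0.1, 0.0, 1.0),
    "ceramic mug": (0.6, -0.1, 0.8),
    "price board": (-0.7, 0.3, 0.6),
}
gaze = (0.58, -0.08, 0.81)

referent = resolve_referent(gaze, objects)
print(f"'that' most likely refers to: {referent}")
```

A production system would likely combine this geometric cue with the language model’s own reading of the question, but the threshold already shows one useful behavior: when nothing is near the gaze, the function returns nothing and the system can ask for clarification.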

This natural interaction removes the friction of having to be overly precise with commands or questions.

Real-Time Adaptation to Changing Environments

Traditional AR information systems struggled with dynamic environments. Pre-programmed responses couldn’t adapt to unexpected situations.

Transformer models excel precisely in these fluid scenarios. They continuously reassess context as conditions change around you.

If you’re using AR navigation and encounter road construction, the system doesn’t just recalculate your route – it might emphasize different landmarks based on your new path or adjust instructions knowing you’re now in an unfamiliar area.

This continuous adaptation creates resilient AR experiences that remain helpful even when circumstances change unexpectedly.

Multimodal Integration for Richer Context

Transformer architectures have evolved to process multiple types of data simultaneously – text, images, audio, and more. This multimodal capability perfectly matches AR’s diverse information streams.

Modern systems integrate what your camera sees with the sounds around you, your location data, time of day, and even behavioral signals like walking pace or gaze patterns.

A museum AR guide might notice you lingering on certain types of artwork, hear you asking questions about specific historical periods, and dynamically adjust its information presentation accordingly.

This integrated approach creates experiences that seem to understand you on a deeper level.

Overcoming Technical Implementation Challenges

Despite their power, implementing transformer models in AR contexts presents substantial challenges. The computational demands can strain mobile devices, and latency must be minimized for seamless experiences.

Developers have responded with innovative approaches, including on-device and edge computing, which process data on the device itself or on nearby hardware rather than sending everything to cloud servers.

Model compression techniques such as quantization, pruning, and distillation reduce model size while preserving most of the accuracy. Hybrid approaches use lightweight models for immediate responses while more complex processing happens in the background.
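
Quantization is one common compression technique and is easy to sketch. The example below maps floating-point weights to 8-bit integers with a single symmetric scale; the weight values are made up, and real toolchains typically quantize per layer or per channel with calibration data.

```python
def quantize(weights):
    """Map floats to integers in [-127, 127] using one symmetric scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]

# Hypothetical weights from one small layer.
weights = [0.82, -1.27, 0.05, 0.0, 0.4]

quantized, scale = quantize(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("int8 codes:", quantized)
print("largest rounding error:", max_error)
```

Each weight now fits in one byte instead of four, and the rounding error is bounded by half the scale, which is the trade-off between size and accuracy that compression has to manage.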

These technical solutions make transformer-powered AR practical even on consumer devices.

Privacy Considerations in Contextual AR

The contextual awareness that makes transformer-powered AR so compelling also raises important privacy questions. Understanding user behavior requires collecting and analyzing personal data.

Responsible implementations use techniques like federated learning, where each device trains on its own data and shares only model updates, so the model improves without raw data leaving your device.
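
The core loop of federated averaging can be sketched in a few lines. This toy version fits a one-parameter model, y = w * x, and the per-device data is invented; actual deployments add secure aggregation, client sampling, and far larger models.

```python
def local_update(weight, examples, learning_rate=0.1):
    """Run one pass of gradient descent on a device's private examples."""
    for x, y in examples:
        error = weight * x - y
        weight -= learning_rate * error * x
    return weight

def federated_average(local_weights, sizes):
    """Combine device weights, weighted by how much data each device holds."""
    total = sum(sizes)
    return sum(w * n for w, n in zip(local_weights, sizes)) / total

# Hypothetical private datasets; these never leave their "device".
device_data = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
]

global_weight = 0.0
for _ in range(20):
    # Each device starts from the shared weight and trains locally.
    local_weights = [local_update(global_weight, data) for data in device_data]
    # The server sees only the resulting weights, never the examples.
    sizes = [len(data) for data in device_data]
    global_weight = federated_average(local_weights, sizes)

print("learned weight:", global_weight)
```

The server only ever handles numbers returned by `local_update`, which is the property that keeps raw observations on the device.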

Differential privacy adds carefully calibrated random noise to data or query results, limiting what can be learned about any one person while keeping aggregate statistics useful.

These approaches allow for personalized experiences while substantially reducing privacy risk, an essential balance for widespread adoption.
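
A standard way to add such noise is the Laplace mechanism. The sketch below applies it to a simple count; the visit count and the epsilon values are hypothetical, and a production system would also need to track the total privacy budget spent across queries.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)

def private_count(true_count, epsilon, rng):
    """Release a count with epsilon-differential privacy.

    Adding or removing one person changes a count by at most 1
    (sensitivity 1), so the Laplace noise scale is 1 / epsilon.
    """
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(42)  # seeded only so the sketch is repeatable
true_visits = 120        # hypothetical: users who lingered at one exhibit

for epsilon in (0.1, 1.0, 10.0):
    noisy = private_count(true_visits, epsilon, rng)
    print(f"epsilon={epsilon}: reported count {noisy:.1f}")
```

Smaller epsilon values give stronger privacy but noisier answers, so the choice of epsilon is a product decision as much as a technical one.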

Industry Applications Transforming Businesses

Across industries, transformer-powered contextual AR is creating new possibilities for information delivery.

Manufacturing environments use these systems to provide workers with exactly the information needed for specific tasks, adjusting detail level based on worker experience.

Retail applications recognize not just products but shopping patterns, offering information that matches your decision-making style.

Healthcare settings implement AR systems that understand medical contexts, providing relevant patient information precisely when needed.

These specialized applications demonstrate how transformers are making AR information delivery more valuable across domains.

The Learning Curve: Improving Through Interaction

One of the most powerful aspects of transformer-based systems is their ability to improve through continued interaction, provided the models are periodically retrained or fine-tuned. Each user engagement provides data that refines the system’s understanding.

This learning happens on both personal and aggregate levels. Your individual AR assistant becomes more attuned to your specific preferences, while the underlying models improve for everyone through anonymized pattern recognition.

This compounding improvement creates systems that become more helpful over time rather than growing outdated.

The best implementations make this learning process transparent, helping users understand how their interactions shape future experiences.

Designing for Contextual Information Flow

Creating effective AR experiences with transformer models requires rethinking information design principles. Traditional approaches that present all information at once become overwhelming.

Modern designs focus on progressive disclosure – revealing information in layers as attention and context indicate interest.
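
Progressive disclosure can be as simple as tying information layers to how long the user has attended to an object. In this sketch the layer names and dwell-time thresholds are assumptions chosen for illustration; a real interface would tune them and likely use richer interest signals than dwell time alone.

```python
# Hypothetical layers: (minimum dwell time in seconds, layer name).
LAYERS = [
    (0.0, "label"),
    (1.5, "summary"),
    (4.0, "details"),
]

def visible_layers(dwell_seconds, layers=LAYERS):
    """Return the layers to show for a given dwell time, shallowest first."""
    return [name for threshold, name in layers if dwell_seconds >= threshold]

for dwell in (0.5, 2.0, 6.0):
    print(f"{dwell:>4}s -> {visible_layers(dwell)}")
```

A quick glance shows only a label, while sustained attention unlocks deeper layers, so the user is never handed everything at once.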

Visual design must accommodate varying information density based on situational needs. Interfaces must balance predictive helpfulness with user control.

These design challenges require collaboration between AI specialists and user experience professionals to create truly useful contextual information systems.

The Future: Ambient Intelligence Through AR

The trajectory of transformer-powered AR points toward what researchers call “ambient intelligence” – helpful information that appears precisely when needed without disrupting your natural flow of activity.

Future systems will become increasingly subtle in their information delivery, perhaps using directional audio or peripheral visual cues rather than center-screen notifications.

The ultimate goal is augmentation that enhances human capability without demanding attention – information that feels like an extension of your own awareness rather than an external system.

Transformer models represent a crucial step toward this seamless integration of digital intelligence into everyday experience.

Conclusion

Transformer models are fundamentally changing what’s possible in contextual AR information delivery. By enabling systems that truly understand the relationship between different elements of context, they’re creating experiences that feel intuitive and helpful rather than mechanical.

As these technologies continue to mature, we’ll see AR experiences that adapt more fluidly to our needs, present information more naturally, and integrate more seamlessly into our daily activities.

The most successful implementations will be those that balance the remarkable capabilities of transformer models with thoughtful design and respect for user agency and privacy.

The future of AR isn’t just about overlaying digital information on the physical world – it’s about delivering exactly the right information at exactly the right moment, in exactly the right way. Transformer models are making this vision increasingly achievable.