AR Mechanics explains how augmented reality seamlessly overlays digital content onto the real world. From sensors and rendering engines to AI integration, AR enhances experiences across industries, education, and entertainment while enabling immersive, interactive, and human-centered applications.

Understanding AR Mechanics: The Foundation of Augmented Reality

Augmented reality has rapidly evolved from a futuristic concept to a transformative technology shaping industries worldwide. At its core, AR Mechanics involves the seamless overlay of digital content onto the real world, allowing users to interact with virtual objects in real-time. Unlike traditional digital interfaces, AR relies on complex systems that detect, map, and respond to environmental data to create an immersive experience.

The basic structure of AR Mechanics combines hardware, software, and advanced algorithms. Hardware components include sensors, cameras, and displays that capture and project information. Software algorithms process these inputs to determine object placement, motion tracking, and contextual relevance. Together, these elements enable AR devices to provide dynamic experiences that adapt to user movements and surroundings.
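Here is a minimal Python sketch of the placement step: how a world-anchored virtual object might be mapped to screen pixels each frame. It assumes a pinhole camera model with OpenCV-style axes (x right, y down, z forward). For brevity it also assumes a camera that only turns about its vertical axis. The names `Intrinsics`, `world_to_camera`, and `place_anchor`, and the intrinsic values, are illustrative and are not taken from any particular AR SDK.

```python
import math
from dataclasses import dataclass


@dataclass
class Intrinsics:
    fx: float  # focal length in pixels, horizontal
    fy: float  # focal length in pixels, vertical
    cx: float  # principal point (image centre), x
    cy: float  # principal point (image centre), y


def world_to_camera(point, cam_pos, cam_yaw):
    """Express a world-space point in the camera's frame.

    Simplifying assumption: the camera only turns about the vertical (y)
    axis; a real tracker would supply a full 6-DoF pose.
    """
    dx, dy, dz = (p - c for p, c in zip(point, cam_pos))
    # Undo the camera's rotation by rotating the offset by -yaw.
    cos_y, sin_y = math.cos(-cam_yaw), math.sin(-cam_yaw)
    x = cos_y * dx + sin_y * dz
    z = -sin_y * dx + cos_y * dz
    return x, dy, z


def project(point_cam, k):
    """Pinhole projection to pixel coordinates; None if behind the camera."""
    x, y, z = point_cam
    if z <= 0:
        return None
    return k.fx * x / z + k.cx, k.fy * y / z + k.cy


def place_anchor(anchor_world, cam_pos, cam_yaw, k):
    """Pixel position at which a world-anchored object is drawn this frame."""
    return project(world_to_camera(anchor_world, cam_pos, cam_yaw), k)
```

With `Intrinsics(500, 500, 320, 240)`, an anchor at (1, 0, 2) projects to pixel (570, 240) when the camera is at the origin. It projects to (320, 240) after the camera steps 1 m to the right. Storing content in world coordinates and re-projecting it every frame is what keeps it fixed to the real scene as the user moves.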

One key step in understanding AR Mechanics is distinguishing it from similar technologies. AR is often compared with virtual reality, but AR vs VR reveals significant contrasts. While VR fully immerses users in a digital environment, AR enhances the real world with additional layers of information. This distinction is critical for businesses and developers who want to use augmented experiences for training, marketing, or operational efficiency.

The Role of Sensors and Spatial Awareness

Sensors form the backbone of AR Mechanics, providing the system with the necessary data to interpret the real world. Modern AR devices use a combination of cameras, accelerometers, gyroscopes, and depth sensors to understand spatial environments. These sensors feed information to the processing unit, which calculates the appropriate placement of digital elements. Without accurate spatial awareness, virtual objects may appear misaligned or fail to respond to user movements effectively.
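A complementary filter is one common way to combine these sensors. The gyroscope gives smooth short-term rotation but drifts over time. The accelerometer's view of gravity is noisy but does not drift. The sketch below uses this approach to estimate a single angle (pitch). The axis convention, the `alpha` value of 0.98, and the sample format are assumptions for illustration. Production AR frameworks typically use richer visual-inertial fusion.

```python
import math


def accel_pitch(ax, ay, az):
    """Pitch (radians) implied by the gravity vector in an accelerometer sample."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_filter(samples, dt, alpha=0.98):
    """Fuse gyroscope and accelerometer readings into a pitch estimate.

    samples: list of (gyro_pitch_rate_rad_per_s, (ax, ay, az)) tuples.
    alpha:   weight given to the integrated gyro (0.98 is an assumed value).
    """
    pitch = accel_pitch(*samples[0][1])  # start from the gravity direction
    estimates = []
    for gyro_rate, accel in samples:
        gyro_estimate = pitch + gyro_rate * dt  # smooth, but drifts with gyro bias
        accel_estimate = accel_pitch(*accel)    # noisy, but drift-free
        pitch = alpha * gyro_estimate + (1 - alpha) * accel_estimate
        estimates.append(pitch)
    return estimates
```

Consider a stationary device whose gyroscope has a 0.01 rad/s bias, sampled 1,000 times at 100 Hz. Integrating the gyro alone would drift 0.1 rad. This filter instead settles at about 0.0049 rad, because the accelerometer term keeps pulling the estimate back toward gravity.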

Advances in AR Hologram Technology build on this spatial awareness. Holographic displays help virtual objects keep a realistic depth and orientation, enhancing user immersion. This is particularly important in applications such as design visualization, education, and interactive entertainment, where precision is critical for effective engagement.

Software Algorithms Behind AR Mechanics

The software layer in AR Mechanics orchestrates the interaction between hardware inputs and visual outputs. Computer vision algorithms detect surfaces, recognize objects, and track user gestures. Machine learning models can predict user intent and optimize virtual object behavior in real-time. Together, these algorithms ensure that augmented content behaves naturally within the physical environment.
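Surface detection is often built on plane fitting. The sketch below uses RANSAC, a standard robust-fitting technique, to find the dominant plane (such as a floor) in a 3D point cloud. It is a simplified illustration, not the method of any specific AR framework. The iteration count and the 1 cm inlier threshold are assumed values.

```python
import math
import random


def plane_through(p1, p2, p3):
    """Unit normal n and offset d with n . p + d = 0, or None if collinear."""
    ux, uy, uz = (b - a for a, b in zip(p1, p2))
    vx, vy, vz = (c - a for a, c in zip(p1, p3))
    # Cross product of the two edge vectors gives the plane normal.
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length < 1e-9:
        return None
    n = (nx / length, ny / length, nz / length)
    d = -(n[0] * p1[0] + n[1] * p1[1] + n[2] * p1[2])
    return n, d


def detect_plane(points, iterations=200, threshold=0.01, seed=0):
    """RANSAC: fit planes to random triples, keep the one most points agree with."""
    rng = random.Random(seed)
    best, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_through(*rng.sample(points, 3))
        if plane is None:
            continue
        (nx, ny, nz), d = plane
        # Points within `threshold` metres of the candidate plane are inliers.
        inliers = [p for p in points
                   if abs(nx * p[0] + ny * p[1] + nz * p[2] + d) < threshold]
        if len(inliers) > len(best_inliers):
            best, best_inliers = plane, inliers
    return best, best_inliers
```

Given a point cloud from the tracker, `detect_plane` returns the plane's unit normal and offset, along with the points that support it. A renderer could then rest virtual objects on that surface.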

Developers also integrate intelligent systems like Voice Enabled Chatbots to improve AR interactivity. By combining voice recognition with AR interfaces, users can navigate augmented spaces, issue commands, or retrieve contextual information without relying solely on manual controls. This integration highlights how AR Mechanics extends beyond visual overlays into multi-modal interactions, creating a richer and more intuitive user experience.

Applications in Business and Industry

Understanding AR Mechanics is not just a technical pursuit—it has practical business implications. Companies exploring AR in Business Operations leverage augmented solutions for training, maintenance, and customer engagement. For instance, warehouse management can benefit from AR-guided inventory navigation, while field technicians can access step-by-step augmented instructions during equipment repairs. The underlying mechanics ensure that AR interventions are accurate, reliable, and contextually meaningful.

Moreover, early AR Pioneers have demonstrated that effective AR integration requires careful consideration of both technological capabilities and human behavior. By aligning AR Mechanics with cognitive patterns and user ergonomics, businesses can maximize adoption and minimize friction.

Advanced Components Driving AR Mechanics

As AR Mechanics continues to mature, the complexity of its components grows. Beyond basic sensors and cameras, modern AR systems now rely on high-performance processors, sophisticated tracking software, and real-time rendering engines. These elements work together to ensure smooth, responsive, and immersive experiences for users across different environments and devices.

One critical advancement in AR Mechanics is the development of high-resolution depth sensing. Depth sensors allow AR devices to accurately gauge the distance and orientation of real-world objects, enabling virtual overlays to interact naturally with physical spaces. This capability is essential for applications like virtual interior design, collaborative 3D modeling, and interactive educational tools.
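Depth data becomes useful for measurement once each depth pixel is turned back into a 3D point. This minimal sketch does that with a pinhole camera model. It then uses the result for a simple "virtual tape measure" between two pixels. The intrinsics `(fx, fy, cx, cy)`, the metre units, and the `depth_map[row][col]` layout are assumptions.

```python
import math


def backproject(u, v, depth, fx, fy, cx, cy):
    """Turn pixel (u, v) and its depth in metres into a 3D camera-space point."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def measure(depth_map, pixel_a, pixel_b, intrinsics):
    """Real-world distance in metres between two pixels.

    depth_map[row][col] holds depth in metres; intrinsics is (fx, fy, cx, cy).
    """
    points = []
    for u, v in (pixel_a, pixel_b):
        points.append(backproject(u, v, depth_map[v][u], *intrinsics))
    return math.dist(*points)
```

Take `fx = fy = 500`, `cx = 320`, `cy = 240`, and a flat wall 2 m away. Pixels (320, 240) and (570, 240) then map to points that are 1 m apart.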

Real-Time Rendering and Visual Fidelity

Real-time rendering is a cornerstone of AR Mechanics. The rendering engine translates digital models into visual outputs that seamlessly blend with real-world imagery. High visual fidelity ensures that virtual objects appear lifelike, maintain proper perspective, and respond dynamically to user movements. Techniques such as occlusion handling and light adaptation are applied so that virtual elements interact realistically with the environment.
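Occlusion handling can be illustrated with a per-pixel depth test. A virtual pixel is drawn only where it is closer to the camera than the real surface measured by the depth sensor. The sketch below works on plain nested lists for clarity, whereas a real renderer performs this test on the GPU. The `composite` helper and the use of `math.inf` to mark pixels with no virtual content are illustrative choices.

```python
import math

NO_CONTENT = math.inf  # virtual depth where nothing virtual was drawn


def composite(camera_rgb, real_depth, virtual_rgb, virtual_depth):
    """Blend camera and virtual images with a per-pixel occlusion test.

    All four inputs are row-major 2D lists of the same size; depths in metres.
    """
    frame = []
    for r, row in enumerate(camera_rgb):
        out_row = []
        for c, real_pixel in enumerate(row):
            # Show the virtual pixel only if it is in front of the real surface.
            if virtual_depth[r][c] < real_depth[r][c]:
                out_row.append(virtual_rgb[r][c])
            else:
                out_row.append(real_pixel)
        frame.append(out_row)
    return frame
```

A virtual object 2 m away is hidden behind a real object 1 m away, but it remains visible in front of a wall 3 m away.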

In parallel, AR Hologram Technology continues to enhance visual realism. Holographic displays project three-dimensional content into physical spaces, and some let users perceive depth without specialized eyewear. The combination of real-time rendering and holographic projection empowers industries such as healthcare, architecture, and entertainment to create highly immersive experiences that feel tangible and interactive.

Gesture and Voice Interaction

Beyond visual immersion, modern AR Mechanics emphasizes natural user interaction. Gesture recognition allows users to manipulate virtual objects intuitively using hand movements, while eye-tracking technology adjusts content based on gaze direction. These interactive elements improve usability and reduce the learning curve for AR applications.
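As a simple example of gesture recognition, a pinch can be detected from the distance between the thumb tip and the index fingertip. The sketch assumes that a hand-tracking component already supplies 3D fingertip positions in metres. The `PinchDetector` class and its 2 cm and 4 cm thresholds are hypothetical. Using two thresholds (hysteresis) stops the gesture from flickering on and off when the fingers hover near a single cutoff.

```python
import math

PINCH_ON = 0.02   # metres; hypothetical "start pinch" distance
PINCH_OFF = 0.04  # metres; hypothetical "release pinch" distance


class PinchDetector:
    """Tracks whether the user is pinching, with hysteresis."""

    def __init__(self, on=PINCH_ON, off=PINCH_OFF):
        self.on, self.off = on, off
        self.pinching = False

    def update(self, thumb_tip, index_tip):
        """Feed one frame of fingertip positions; returns the pinch state."""
        distance = math.dist(thumb_tip, index_tip)
        if self.pinching and distance > self.off:
            self.pinching = False
        elif not self.pinching and distance < self.on:
            self.pinching = True
        return self.pinching
```

With fingertip gaps of 5 cm, 1.5 cm, 3 cm, and 5 cm on successive frames, the detector reports no pinch, pinch, still pinching, and released.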

Additionally, integrating Voice Enabled Chatbots within AR interfaces is revolutionizing the way users interact with augmented content. Users can ask questions, navigate virtual menus, or execute commands through natural language, reducing dependency on touch or manual input. This integration demonstrates how AR Mechanics is evolving into a multi-sensory platform, combining visual, auditory, and gestural interactions for a seamless experience.
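The step from recognized speech to an AR action can be sketched as a small dispatcher. This example assumes that a speech recognizer has already produced a text transcript, and it uses plain keyword matching. The command phrases and responses are hypothetical. A chatbot would typically replace the matching step with a natural-language intent model.

```python
from typing import Callable, Dict


def make_dispatcher(commands: Dict[str, Callable[[], str]]):
    """Map phrases found in a speech transcript to AR actions."""

    def dispatch(transcript: str) -> str:
        text = transcript.lower()
        # Check longer phrases first so "next step" wins over "next".
        for phrase in sorted(commands, key=len, reverse=True):
            if phrase in text:
                return commands[phrase]()
        return "Sorry, I didn't catch that."

    return dispatch
```

Suppose handlers are registered for "show menu" and "next step". The transcript "OK, next step" then triggers the "next step" handler, and unrecognized speech falls back to a clarification prompt.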

AR Mechanics in Collaborative Environments

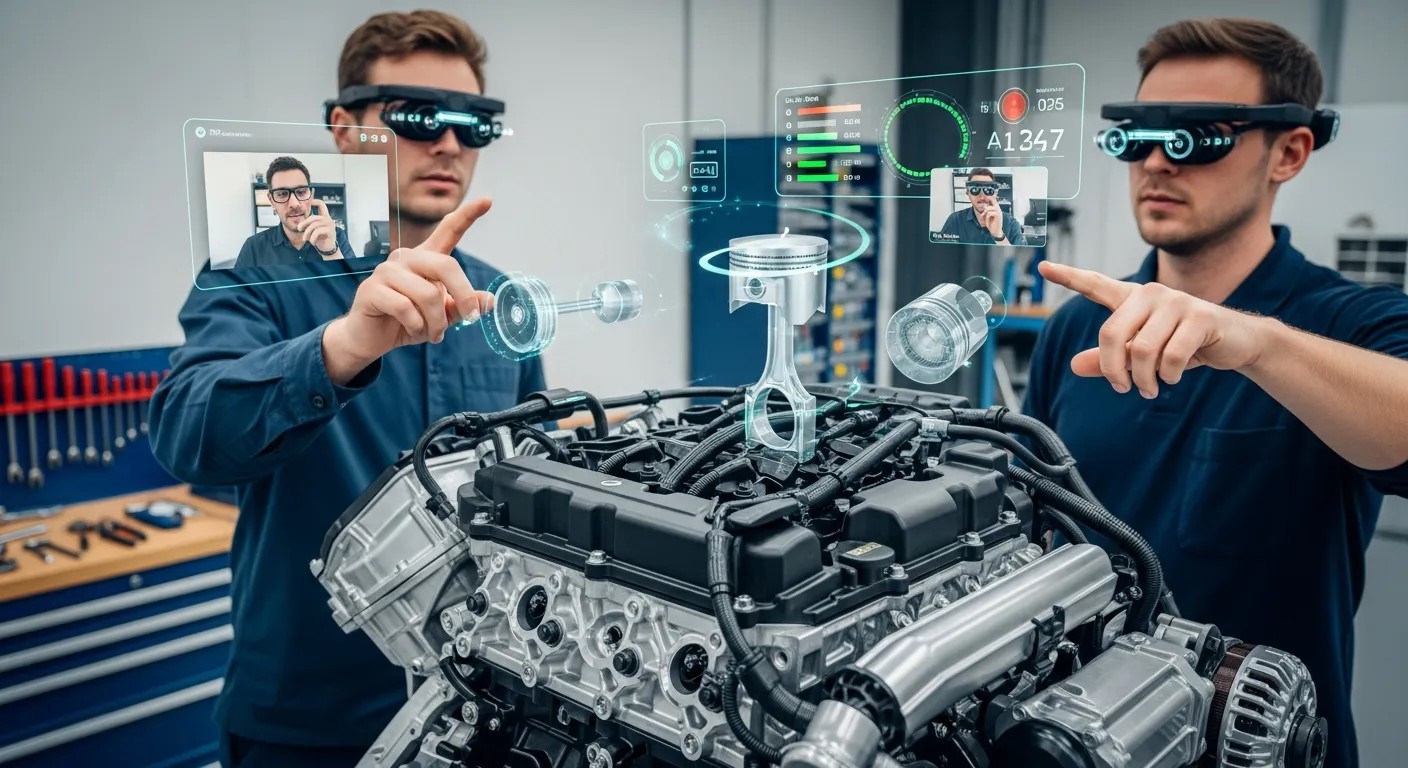

One of the most promising areas for AR Mechanics is collaborative environments. Teams spread across different locations can interact with shared augmented objects in real-time, enhancing communication and decision-making. For example, architects and engineers can review 3D models together in AR, making modifications visible instantaneously to all participants.
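For every participant to see the same modifications, each device needs a rule for merging updates that may arrive in different orders. The sketch below uses last-writer-wins, one of the simplest strategies. Each update carries a timestamp, and ties are broken by author name so that all replicas agree. The `Update` and `SharedScene` names are hypothetical, and the approach assumes reasonably synchronized clocks. Many collaborative systems instead rely on an authoritative server or on CRDTs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Update:
    object_id: str
    position: tuple   # (x, y, z) in shared world coordinates
    timestamp: float  # seconds; assumes roughly synchronized clocks
    author: str


class SharedScene:
    """One participant's replica of the shared AR scene."""

    def __init__(self):
        self.objects = {}  # object_id -> latest accepted Update

    def apply(self, update):
        current = self.objects.get(update.object_id)
        # Last-writer-wins: the newer timestamp wins, and author breaks ties,
        # so every replica converges regardless of arrival order.
        if current is None or (update.timestamp, update.author) > (
            current.timestamp, current.author
        ):
            self.objects[update.object_id] = update
```

If an architect and an engineer move the same beam, both replicas keep the later move, even when they receive the two updates in opposite orders.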

The inclusion of AI Multilingual Bots in these scenarios adds another layer of accessibility. By translating spoken or written commands in real time, these bots help multilingual teams collaborate more effectively, reducing language barriers and improving workflow efficiency. Such innovations highlight how AR Mechanics is not only a technical marvel but also a powerful tool for human-centered collaboration and productivity.

Key Considerations for Developers

Developing effective AR experiences requires a thorough understanding of AR Mechanics principles. Developers must optimize performance for various hardware, account for environmental variability, and ensure intuitive user interactions. Real-world testing is crucial, as even minor inconsistencies in tracking or rendering can break immersion.

Furthermore, privacy and data security are increasingly important considerations. AR systems often rely on cameras and sensors to capture extensive environmental data. Ensuring secure handling of this information is critical, particularly in commercial applications where sensitive operational or personal data is involved.

Industry Applications Shaped by AR Mechanics

The transformative power of AR Mechanics is evident across multiple industries. From retail to healthcare, education to manufacturing, businesses are leveraging augmented reality to improve efficiency, engagement, and decision-making. By understanding how AR systems function, organizations can implement solutions that align with both operational goals and human behavior.

Healthcare and Medical Training

In healthcare, AR Mechanics is transforming training and patient care. Surgeons use AR overlays to visualize anatomy during procedures, with the aim of improving precision and reducing errors. Medical students benefit from interactive AR models that demonstrate complex physiological processes in 3D. The integration of AR Hologram Technology allows trainees to study virtual organs or simulate surgeries without risk to patients, creating a safe yet highly realistic learning environment.

Retail and Customer Engagement

Retailers are also harnessing AR Mechanics to improve the shopping experience. Virtual try-ons, interactive product demonstrations, and AR-enhanced store layouts enable consumers to engage with products in ways that were impossible before. By combining intuitive interfaces with real-time data, businesses can create personalized experiences that resonate with customers, ultimately driving engagement and sales.

Manufacturing and Operational Efficiency

In manufacturing, AR Mechanics supports tasks like equipment maintenance, assembly guidance, and workflow optimization. AR overlays provide step-by-step instructions directly on machinery, reducing training time and minimizing errors. Companies implementing AR in Business Operations report measurable improvements in productivity, accuracy, and safety. For instance, AR-assisted maintenance allows technicians to identify and fix issues without repeatedly consulting manuals or remote experts, saving time and resources.

Education and Training

Education has also embraced AR Mechanics to enhance learning outcomes. Interactive AR lessons transform traditional classrooms into immersive environments, helping students visualize abstract concepts and retain information more effectively. When these lessons are paired with Voice Enabled Chatbots, learners can ask questions, receive instant feedback, and navigate lessons in a personalized way, creating an adaptive and engaging educational experience.

AR in Entertainment and Gaming

The entertainment sector leverages AR Mechanics to blend real-world and virtual experiences seamlessly. Live concerts, AR-enhanced theme parks, and interactive gaming experiences all rely on precise mechanics to maintain immersion. Early AR Pioneers demonstrated that achieving this level of engagement requires a careful balance of technology, design, and human psychology. Their innovations laid the foundation for modern applications that are now common across entertainment and media.

AR Mechanics for Collaborative Innovation

Collaboration is another area where AR Mechanics shines. Distributed teams can share AR workspaces to visualize prototypes, review designs, or conduct strategic planning. Tools integrating AI Multilingual Bots allow multilingual teams to interact without language barriers, fostering smoother communication and faster decision-making. These collaborative applications demonstrate that the mechanics of AR extend beyond visualization, enabling actionable insights and real-time cooperation across borders.

Key Challenges and Considerations

Despite its promise, mastering AR Mechanics comes with challenges. Accurate tracking, latency issues, and device compatibility remain technical hurdles. Developers must also consider ergonomics and user fatigue, particularly in wearable AR devices. Ethical considerations, such as privacy and data security, are paramount, as AR systems frequently capture environmental and personal data.
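A little arithmetic helps with reasoning about latency. The refresh rate sets the time budget for each frame. The user's head angular speed multiplied by the latency gives how far a virtual object can lag behind its real anchor. A simple constant-velocity prediction can compensate for part of that delay. The helper functions below are an illustrative sketch with assumed example values, not the prediction model of any particular runtime.

```python
import math


def frame_budget_ms(refresh_hz):
    """Time available to track, render and display one frame, in milliseconds."""
    return 1000.0 / refresh_hz


def misregistration_px(angular_speed_rad_s, latency_s, fx):
    """Approximate on-screen lag of content near the image centre.

    Assumes a pinhole camera with focal length fx in pixels.
    """
    return fx * math.tan(angular_speed_rad_s * latency_s)


def predict_position(position, velocity, latency_s):
    """Constant-velocity extrapolation of head position over the expected latency."""
    return tuple(p + v * latency_s for p, v in zip(position, velocity))
```

At 60 Hz each frame has about 16.7 ms, and at 90 Hz about 11.1 ms. A head turning at 100°/s with 20 ms of latency rotates 2°. With a 500-pixel focal length, that shifts content by roughly 17 pixels unless the pose is predicted ahead.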

By addressing these challenges, organizations can leverage AR Mechanics to deliver innovative solutions that are both technologically advanced and user-friendly. Strategic planning, real-world testing, and continuous optimization are essential to achieving meaningful results in any industry.

Future Trends and Innovations in AR Mechanics

As AR Mechanics evolves, the horizon of possibilities continues to expand. Emerging technologies, enhanced algorithms, and integration with artificial intelligence are pushing the boundaries of what augmented reality can achieve. Companies and innovators are now exploring ways to merge digital content more seamlessly with the real world, creating experiences that are both functional and emotionally engaging.

The Rise of Smart AR Interfaces

One significant trend in AR Mechanics is the development of smarter, more intuitive interfaces. Gesture recognition, eye tracking, and voice interaction are becoming standard features, allowing users to interact with AR content naturally. The integration of Voice Enabled Chatbots makes navigation and information retrieval more fluid, offering an experience that can feel closer to talking with another person than to operating a machine.

These interfaces are also being enhanced with predictive AI, which anticipates user behavior and adjusts virtual content dynamically. For example, an AR app in retail may automatically suggest products or overlay relevant information based on user actions, creating a personalized and context-aware experience.

AR in Multilingual and Global Environments

The incorporation of AI Multilingual Bots in AR applications is transforming global collaboration and accessibility. Users from different linguistic backgrounds can interact seamlessly with augmented interfaces, breaking down communication barriers and enabling more inclusive experiences. This capability is especially valuable in industries like education, healthcare, and multinational business operations, where real-time understanding is critical.

Blending AR and VR: Understanding AR vs VR

While AR and VR are often discussed together, understanding the differences is crucial for both developers and users. AR vs VR is not just a technological distinction—it represents different approaches to human experience. VR immerses users in fully digital environments, cutting them off from the real world, whereas AR overlays digital elements while maintaining a connection with physical surroundings.

Modern applications increasingly combine aspects of both, creating hybrid experiences that leverage the strengths of each technology. This “mixed reality” approach allows organizations to design more versatile and engaging solutions, ranging from virtual training simulations to interactive entertainment experiences.

Holographic Advancements and Realism

Another cutting-edge trend in AR Mechanics is the advancement of AR Hologram Technology. Holographic projections enhance depth perception and spatial realism, making virtual objects appear tangible and interactive. Industries such as architecture, medicine, and manufacturing benefit immensely from this technology, as it allows professionals to analyze complex structures, simulate procedures, or visualize prototypes with unprecedented clarity.

The combination of holographic projection, real-time rendering, and intelligent AR software creates immersive experiences that were once considered science fiction. This convergence of technologies reflects how the mechanics behind AR are continuously evolving to meet both human and operational demands.

Preparing for the Next Generation of AR

The future of AR Mechanics lies not only in technological refinement but also in understanding human interaction with digital content. User-centric design, ethical considerations, and seamless integration into daily life are as important as hardware and software advancements. Early adoption by AR Pioneers has paved the way, but widespread impact will depend on accessibility, cost-efficiency, and intuitive experiences that resonate with a broad audience.

Emerging trends suggest a future where AR is integrated into everyday devices, workplaces, and entertainment platforms, bridging the gap between digital and physical realities in ways that are both functional and emotionally engaging. Companies that master these mechanics early will be positioned as leaders in the next wave of augmented experiences.

AR Mechanics in Device Ecosystems

The effectiveness of AR Mechanics depends heavily on the ecosystem of devices that support it. Smartphones, tablets, wearable AR glasses, and head-mounted displays all offer unique capabilities, from motion tracking to high-resolution rendering. Integration across these devices ensures that augmented experiences are consistent, responsive, and immersive. Developers must consider hardware limitations, battery life, and sensor accuracy when designing AR applications. Emerging ecosystems also prioritize interoperability, allowing users to transition seamlessly between devices without losing context or functionality. By optimizing AR content for multiple platforms, organizations can maximize reach and usability. This interconnected device ecosystem is central to the practical implementation and adoption of AR Mechanics in real-world scenarios.
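One way to handle differing hardware is to select features based on a device's reported capabilities and fall back gracefully when something is missing. The `DeviceCaps` fields, the feature names, and the 20% battery threshold below are hypothetical and are chosen only to illustrate the pattern.

```python
from dataclasses import dataclass


@dataclass
class DeviceCaps:
    has_depth_sensor: bool
    has_hand_tracking: bool
    battery_fraction: float  # 0.0 (empty) to 1.0 (full)


def choose_features(caps: DeviceCaps) -> dict:
    """Pick the AR features a device can support, degrading gracefully."""
    return {
        # Depth-based occlusion only where a depth sensor exists.
        "occlusion": "depth" if caps.has_depth_sensor else "off",
        # Fall back to touch input when hands cannot be tracked.
        "input": "hands" if caps.has_hand_tracking else "touch",
        # Halve the frame rate to save power when the battery is low.
        "target_fps": 60 if caps.battery_fraction > 0.2 else 30,
    }
```

Under these assumptions, a phone without a depth sensor at 15% battery would get touch input, no occlusion, and 30 fps. A fully equipped headset would get depth occlusion, hand input, and 60 fps.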

Conclusion

Understanding AR Mechanics is essential for leveraging augmented reality’s transformative potential. By combining advanced sensors, real-time rendering, gesture recognition, and AI-driven interfaces, AR seamlessly merges digital content with the physical world. Businesses, educators, and innovators benefit from improved engagement, operational efficiency, and immersive learning. Emerging trends, including holographic technology, multilingual AI bots, and hybrid AR-VR experiences, indicate a future where AR becomes an integral part of daily life. Strategic implementation of these mechanics ensures both technological innovation and user-centric design, paving the way for sustainable adoption and meaningful real-world impact across multiple sectors.

Frequently Asked Questions (FAQ)

What is AR Mechanics?

AR Mechanics refers to the systems, hardware, and software processes that enable augmented reality to overlay digital content on the real world.

How is AR different from VR?

While VR immerses users in a fully digital environment, AR overlays digital elements while keeping the real world visible and interactive.

Where is AR used in business?

AR is applied in training, maintenance, product visualization, marketing, and operational optimization, improving efficiency and engagement.

Can AR support multilingual collaboration?

Yes, AI Multilingual Bots integrated into AR platforms allow real-time translation, enabling global teams to collaborate seamlessly.

What are the future trends in AR?

Trends include holographic projections, hybrid AR-VR experiences, AI-driven interfaces, and device ecosystem integration for seamless immersive experiences.